Local search engine listings may be little more than an afterthought to some webmasters, but they are a source of business that you shouldn’t ignore. Optimizing your site for local searches and making sure you’re listed in the local versions of the major search engines is a smart move, and doing so is fairly quick and easy. The three biggies in local search are Google Local, Yahoo! Local, and Bing Local.

Just to get everyone on the same page, let’s review the concepts behind Google AdWords, specifically the concept of “pay per click,” or PPC. PPC is an advertising model in which you, the advertiser, pay the site hosting your ad only when somebody clicks the ad. To buy search engine ads, advertisers bid on relevant keyword phrases, whereas content sites usually charge a fixed price per click. Cost per click (CPC) is how much the advertiser pays each time someone clicks the ad. So if a host site doesn’t generate any click-throughs, the merchant incurs no cost. A site selling PPC ads displays your ad when a user’s query matches a phrase on your keyword list.
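To make the arithmetic concrete, here is a minimal sketch of how spend adds up under pay per click. The function name and the click and price figures are made up for illustration; they are not AdWords rates.

```python
def ppc_cost(clicks: int, cost_per_click: float) -> float:
    """Total spend under pay per click: you pay for clicks, not for impressions."""
    if clicks < 0 or cost_per_click < 0:
        raise ValueError("clicks and cost_per_click must be non-negative")
    return round(clicks * cost_per_click, 2)


# Hypothetical campaign: the ad was shown many times but clicked 40 times
# at an assumed $0.50 per click.
print(ppc_cost(40, 0.50))  # 20.0

# No click-throughs means no cost, however often the ad was displayed.
print(ppc_cost(0, 0.50))   # 0.0
```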

The Google AdWords Conversion Tracking Guide is a PDF guide you should at least bookmark, or maybe print out for reference. Conversion tracking works like this. It places a cookie on the computer of the user who clicks on your ad. If the user then reaches one of your conversion pages, the user’s browser sends the cookie to one of the Google servers, and a conversion tracking image is shown on that page. When this match is made, it’s considered a successful conversion. For example, see the ad for Klogzilla.com in the screen shot? If someone were to click on that ad, Klogzilla would have to pay Google a certain amount based on what keyword brought the person there. Some percentage of that money would be shared with the owner of the website displaying the ad. If Klogzilla had the requisite tracking code in its website HTML, it could track these conversions.

To do conversion tracking, you place a few pre-written lines of code into your website’s HTML. With AdWords, you need access to your website’s code and your Google AdWords account. The Tracking Guide referenced above offers instructions for inserting the lines of code into pages written in several web programming languages.

One hour (give or take) after you’ve successfully installed the code into your site, you can see your conversion tracking reports from your AdWords Campaign Summary page and Report Center. You can look at conversion reports for individual keywords and key phrases too. Your AdWords reports show statistics on users who click one of your Google AdWords ads and then complete a specified conversion, such as a sign-up, a page view, or a purchase. You get the snippet of code when you sign up on the AdWords Conversion Tracking page, under the Campaign Management tab.
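If you export those per-keyword numbers, the two figures most people want are conversion rate and cost per conversion. The sketch below assumes you already have clicks, conversions, and spend for each keyword; the class name, field names, and sample figures are hypothetical and are not part of any AdWords report format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KeywordStats:
    keyword: str
    clicks: int
    conversions: int
    cost: float  # total spend on this keyword, in dollars

    @property
    def conversion_rate(self) -> float:
        # Share of ad clicks that ended in a sign-up, page view, or purchase.
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cost_per_conversion(self) -> Optional[float]:
        # None when nothing converted, so we never divide by zero.
        return self.cost / self.conversions if self.conversions else None


# Made-up sample rows for illustration only.
rows = [
    KeywordStats("organic flour", clicks=200, conversions=10, cost=50.0),
    KeywordStats("bread flour bulk", clicks=80, conversions=0, cost=24.0),
]
for row in rows:
    print(row.keyword, row.conversion_rate, row.cost_per_conversion)
```

A keyword with plenty of clicks and no conversions, like the second row, is the kind of thing these reports are meant to surface.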

As Magnum, P.I. used to say, I know what you’re thinking, and you’re right. This can rapidly become complicated. As if you weren’t spending enough hours running your business and updating your website, now if you want to figure out where your AdWords dollars are doing their best, there’s all this tracking to deal with.

Well, there are search engine marketing firms and AdWords account management consultants that can do this stuff for you. The idea is that they know how to manage your AdWords campaigns so that they reach the people who want to buy your product or service. The Google Advertising Professionals program certifies those who pass a qualifying exam and meet other qualifications.

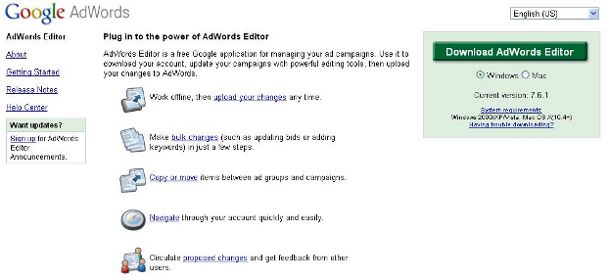

If your budget doesn’t run to hiring consultants yet, Google also provides its own software for AdWords account management called AdWords Editor, which you can see in the screen shot. Access it at http://www.google.com/intl/en/adwordseditor/. You can also sign up for something called My Client Center, which is part of the Google Advertising Professionals suite, but which you can use even if you’re not a certified Google Advertising Professional. It’s a shell account that links up all your AdWords accounts in one location, so you don’t have to log in and out to switch AdWords accounts. This is great for the advertising professional who manages AdWords accounts for several clients too. You can generate multiple accounts at once, invite new clients to have their accounts managed by you, and generally have dashboard access to your own or your clients’ AdWords account(s).

To be able to use this, go to the Google Advertising Professionals sign-up page at https://adwords.google.com/professionals/ and sign up for free. You’ll be given a My Client Center account as soon as you enroll.

In fact, if you have time (I know, fat chance, right?), signing up with the Google Advertising Professionals program and gradually working your way through it will make you an expert on pay per click management of AdWords accounts and help you use your AdWords dollars optimally.

The fundamentals of SEO apply regardless of which search engine you want to rank in, but each of the major ones has some finer points, so let’s get right to them.

You may have heard that participating in Google AdWords would penalize your site in other search engines, but Yahoo! insists that this is not the case. They really have nothing to gain by dropping sites that are relevant, and if they did, they’d be cutting off their nose to spite their face. But the age of your domain is important because a longer track record ups a site’s relevancy. It’s only one score, and it’s one you can’t do much about, but it’s something to keep in mind: SEO is a long term idea.

Yahoo! suggests registering domains for more than one year at a time. It gives your site a long-term focus and keeps you from accidentally losing a domain because you didn’t find the email that it was time to renew. They also suggest you should buy the same domain name with and without dashes. This will keep you from losing so-called type-in traffic. However, all SEO pundits say that the more dashes, the harder it is to type in, and the more spam-like it will look, so don’t get carried away.

As with Google, relevant inbound links from high quality pages are gold with Yahoo!. This is one of the hardest parts of SEO, but it’s one where there really aren’t many shortcuts. You want links from sites that belong to the same general neighborhood of topics as yours. If you have a site that sells organic flour, a link from a fishing tackle site isn’t going to help you much, if at all. And when it comes to giving out your own links, be careful here as well. If you link to a lot of sites that are or could be penalized, you could be hurting yourself by association. If you sell text links, check out every site that buys from you to make sure you’re not endorsing spam, porn, or other content that search engines frown upon.

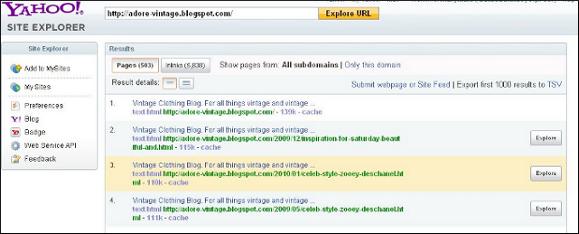

Make use of Yahoo Site Explorer to see how many pages are indexed and to track the inbound links to your site. The first screen shot shows the results of the analysis of one site. As you can see, there are 503 pages on the site, and 5,838 inlinks, each of which you can explore further. To maximize crawling of your site and indexing of pages, publishing fresh, high quality content is the key.

There have been some case studies about what Bing looks at compared to Yahoo! and Google when ranking sites. When ranking for a keyword phrase, both Bing and Google look at the title tag 100% of the time. Prominence is given a little more weight with Bing than with Google, while Google favors link density and link prominence more than Bing. Bing evaluates H1 tags, while Google does not, and Google considers meta keywords and description while Bing does not. What that all boils down to with Bing is that having an older domain and having inbound links from sites that include the primary keyword in their title tags (another way of saying relevant inbound links) are keys to optimizing for Bing. Like optimizing for the other major search engines, link building should be a regular, steady part of your SEO effort.

With Bing, it’s easier to compete for broad terms. With Bing, keyword searches result in Quick Tabs that offer variations on the parent keyword. This has the effect of bringing to the surface websites that rank for those keyword combinations. The goal is for content-rich sites to convert better than sites with less relevant content. The multi-threaded SERP design brings up more pages associated with the primary keywords than would come up with a single-thread SERP list. Also, Bing takes away duplicate results from categorized result lists. This allows lower ranked pages to be shown in the categorized results.

The Bing screen shot shows the results for a search on “video cameras.” To the left is a column of subcategories. Results from those subcategories are listed below the main search results. While there are some differences to SEO for Bing, the relatively new search engine isn’t a game changer when it comes to SEO.

It sometimes seems as if SEO is synonymous with “SEO for Google,” since Google is the top search engine. And it also seems that when it comes to SEO for Google, a lot of the conventional wisdom has to do with not displeasing the Google search engine gods by doing things like cloaking, buying links, etc.

The positive steps toward SEO with Google include keywords in content and in tags, good inbound links, good outbound links (to a lesser extent), site age, and top level domain (with .gov, .edu, and .org getting the most props). Negative factors include all-image or Flash content, affiliate sites with little content, keyword stuffing, and stealing content from other sites. It isn’t so much that Google wants to seek out and destroy sites that buy links. Rather, Google wants the sites with actual relevant, fresh content to have a shot at the top, and with some sites trying to game the system and get there dishonestly, Google has to find a way to deal with these sites without hurting the good sites.

In fact, Google wants users to report sites that are trying to cheat to get to the top of the search engines. On the screen shot, you can see a copy of the form found at https://www.google.com/webmasters/tools/spamreport?pli=1 for reporting deceptive practices. You have to be signed in to your Google account to use this, by the way. They want to get away from anonymous spam reports.

Just over a year ago, Google made some changes in their algorithm that appeared to be giving weight to “brands.” Now, “brand” is a short word that’s loaded with a lot of meaning. When this change took place, there were plenty of people running around in circles waving their arms in the air thinking that the big, rich corporations were going to dominate the search engine rankings and the whole purpose of organic search would be lost forever (the purpose being bringing users relevant results that could be from anyone, anywhere if they were right on target).

Fortunately, the passage of time has let people make some more considered analyses of that change. To get up to speed, let’s take a quick look at some of the ancient history (dating back to 2003) in search engine technology.

Education specialists will tell you that some of us learn more readily from reading, some from hearing, some from video, and some from doing. It only makes sense that when you conduct an internet search, you avail yourself of all the options when it comes to the information out there on the subject you’re interested in. That’s one reason why multimedia searching is a good idea.

Another reason is that sometimes the best information is something other than a written web page. For example, if you were to find a web page describing a newly discovered piece of music by one of the great composers of the past, the description would no doubt be interesting, but you could really appreciate it better if someone had posted a recording of someone playing the composition.

Likewise with video. While it can be thrilling to read a news story about a very close speed skating finish at the Olympics, you can experience it better if you watch a video of it.

When it comes to using multimedia to improve your search engine rankings, there are a number of ways to do it. You can post your own video content, or you can embed relevant video onto your page.

Depending on who’s doing the talking, Google’s new Enhanced Local Listings (currently available only in Houston, Texas and San Jose, California) are either a boon to small businesses with small advertising budgets or the end of organic search as we know it. Here is what all the heated discussion is about.

The Google Lat Long Blog describes it as “a new ads feature in local search that allows business owners to enhance their listings.” Apparently, it is not a matter of “buying position” on the SERP: “When the listing shows up in your Google.com or Google Maps search results, the enhancement also appears alongside it.”

The “enhancement” as you can see in the screen shot is a little yellow flag. Your listing will also have “sponsored” next to it. The enhancement is something you can click on to go to the business’s website, pictures, menu, or coupon. On the screen shot you can see that the flag will take you to the company’s website.

This service costs $25 per month and according to Google, it does not affect your search engine rankings. It only shows up beside your listing where your listing would appear anyway. So what are the pluses and minuses of this program? Forums have been thrashing it out the past couple of days. The two main arguments are: 1) It could help small businesses who don’t have $1000 or so a month for an effective AdWords account and 2) Just you wait: this will result in purchasing rank eventually and organic search as we know it on Google will be over. Let’s take the arguments in turn.

OK, if you’re a small business, you probably don’t have the money or the time to plan and execute a competitive ad plan on AdWords, and the return on investment of a smaller AdWords account isn’t always worth the trouble or cost. And even small businesses can fork over $25 to highlight their ad and include a link to a coupon, menu, photos, or just the website. Maybe it would be good to run for a month while they had a special promotion going on. There’s little question that Google’s bottom line will benefit. According to a Chantilly, Va. local business advertising company called BIA / Kelsey, advertising for small businesses is a $29 billion market. Those $25 flags will add up fast if Google rolls the program out nationwide.

Google says that it is thinking about taking the program nationally, but they don’t know exactly when. Businesses in San Jose and Houston can get into the program and get an enhanced listing with a little yellow flag and link for free for the rest of February. In March, it will start costing $25 per month. Businesses that don’t rank won’t really get anything for their $25, so local SEO will still be important.

If you’re a small business that has claimed its local Google listing, then whether you buy an enhanced listing or not, you get something called dashboard analytics. It tells you where visitors go when they hit your local maps-based listing: to your website, to directions to your place, or to your Google Maps record.

There are plenty of people, though, who see this as a slippery slope to paid rankings. Some of them believe that as the program grows, it will become competitive (like AdWords), and the position will be determined by the highest bids. Others think that if the program is nationwide, everyone will pay $25 per month and the enhanced listings will no longer stand out in a sea of little yellow flags.

Then there are those who believe that now that Google has its big toe in the door, eventually there will be a more complex structure for price and what it gets you. After people get used to it, paying for real estate on page 1 of the SERPs will have worked its way into the mix without anyone thinking it’s any big deal. Boom: the end of Google’s organic search results. With AdWords already putting a price on 10 to 20% of the space on each SERP, Google will eventually want the other 80 to 90% to be monetized too. Other conjectures about Google’s nefarious plan include offering something like number one placement with purchase of a “premium” package.

The most likely scenario is that the program will roll out nationwide, everyone will pay $25 for a flag, and therefore nobody’s listing will stand out (except, ironically, maybe the oddball who ranks and doesn’t buy the enhanced listing). Pay for rank? I don’t know. It seems as if Google has an awful lot to lose by doing that. I suppose it is possible that Google thinks it’s so big and dominates search engines so thoroughly that they could pretty much do what they want and get away with it.

But if that were the case, it would make the time ripe for a new or open-source search engine to bust out due to its simple interface and truly organic listings. Or else Google could separate their search into something like “New Coke” (where businesses can buy rank) and “Classic Coke” (where the results are pure and ads are either gone or could be turned off). That would allow those who actually care about relevance and quality to do their thing while the ones that were OK with buying ranking could have their own search universe too.

If you’ve checked your gmail account in the past few days, then you’ve probably gone straight to a screen asking if you want to set up Google Buzz. If you’re worried about a complex set-up procedure, then stop worrying. It’s extraordinarily easy to set up because you’re walked through every step and it’s all very clear.

But perhaps before you get all gung-ho on what should be a new and easy way to tie together contacts and share social networking information, you should know up front that Google Buzz feels very “wide open,” and that’s because, well, it is. Google has already taken some heat from a blogger who found out the hard way that Buzz was revealing her present social networking information to her ex-husband because he is, for better or worse, one of her “most frequent” contacts. Your most frequent contacts are automatically allowed access to your Google Reader unless you explicitly block them. “Frequent” contacts are also those who email you frequently, whether you want them to or not.

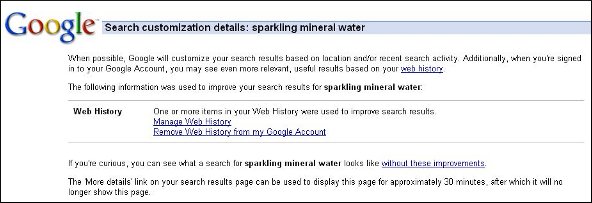

In December 2009 Google rolled out Personalized Search for users who were not signed in, and in over 40 languages. Personalized search has been around for a while for signed-in users who have web history enabled. This allows Google to fine-tune your search results based on past searches and on the sites you’ve clicked on in the past. This is how Google tries to optimize searches for you when your search terms have more than one meaning.

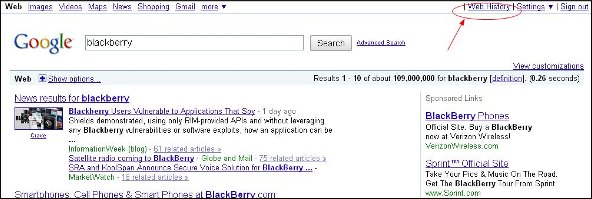

For example, Googling the term “blackberry” while signed in with web history on gets the results you see on the first screen shot. From my search history it is clear that I’m much more likely to be searching for information about the BlackBerry PDA than about the actual fruit. On the other hand, my mom, who does a lot of baking and jam-making, would probably end up with search results about the fruit rather than about the electronic device, based on her history of looking up recipes.

So now that personalized search is on offer for signed out users, exactly what does that mean?

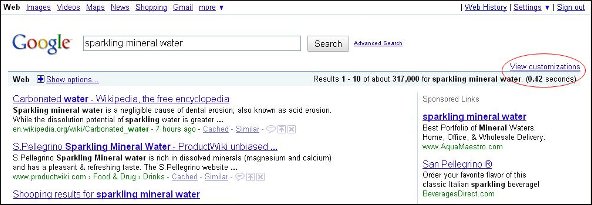

It means that personalized search can use an anonymous cookie on your browser to base your search results on 180 days of search activity linked to this cookie. This is completely separate and apart from your Web History and your Google account. When you have the option of personalized results while signed out, you will see a link that says View Customizations in the top right corner of the results page, as you can see in the second screen shot. When you click on it, you can see how the results are customized and, if you want, you can turn off this type of customization, as you can see in the third screen shot.

Now, there are obviously computers where lots of different people search, so the browser cookie might be influenced by multiple people’s search activity. To protect the privacy of the non-signed-in users, you can’t actually view the specific search activity upon which the signed-out personalized search is based. Plus, you can turn off personalized search settings for signed-out personalized search altogether if you want.

As for signed-in personalized search, you can clear the history upon which your personalized results are based at any time to protect your privacy. That way, if you stay signed in and someone else wants to know what you’ve been searching on, they won’t be able to do it through your personalized search history. Of course they could still go through your web history, so if you’re concerned, you should use your browser to clear your web history.

If you’re a webmaster, you might be wondering whether personalized search, adopted on a massive scale, will affect your ability to reach the people you want to visit your website. The answer, unsatisfactory as it may be, is “Yes, and no.”

Consider this. Say a user searches for “pith helmets” and visits the top result and the last two results on the first page of listings. Those three websites will then be added to the person’s personalized search data. The next time the user searches for “pith helmets,” those two sites from the bottom of the first page of results will rank higher than they would in an organic, starting-from-scratch search.

But what if the user finds another search result, say the 7th one on the new results page, bookmarks it, and goes there directly rather than searching for pith helmets for the time being? A few months later, he thinks that maybe there’s a better pith helmet out there, so he does another search. This time, the one he bookmarked shows up as the top result. What gives?

Even though that site didn’t do any special SEO, there it is, right at the top of our pith helmet-loving searcher’s results. However, other searchers will find other pith helmet dealers at the top of their results pages.
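The pith helmet scenario can be pictured with a toy model. This is emphatically not Google’s algorithm, which isn’t public; it’s a deliberately simple sketch that assumes personalization does nothing more than lift sites a user has already visited above the rest. The function name and site names are invented.

```python
def personalize(results, visited):
    """Toy re-ranking: previously visited sites move ahead of unvisited ones.

    sorted() is stable, so sites keep their original relative order
    within the visited group and within the unvisited group.
    """
    return sorted(results, key=lambda site: site not in visited)


# Ten hypothetical first-page results, in organic order.
organic = [f"site{n}" for n in range(1, 11)]

# Our searcher clicked the top result and the last two on the page.
history = {"site1", "site9", "site10"}

print(personalize(organic, history))
# ['site1', 'site9', 'site10', 'site2', 'site3', 'site4',
#  'site5', 'site6', 'site7', 'site8']

# A different searcher with no history sees the organic order unchanged.
print(personalize(organic, set()) == organic)  # True
```

Even in this crude form, it shows why two people typing the same phrase can see different sites at the top.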

So how do you, the webmaster, change your SEO strategy? Or do you need to change it? If you’re using legitimate, white-hat ways of getting traffic to your site, then your site will be more likely to bubble to the top of the search results for personalized search. Someone searching on a term for the first time might find your site perched at No. 1 simply because he has already visited it a bunch of times through other routes. This is true even if your site wouldn’t be tops in an organic search.

There’s nothing really that you should change in terms of your SEO strategy. Keep doing the on-site SEO as you have been, and you’ll probably do fine. But there’s nothing wrong with using off-site SEO to build up your brand and keep your visitors happy. Again, it boils down to having great content that people will find compelling and that will make them want to come back. This helps you whether the game is organic search or personalized search.

The takeaway is this: Google personalized search isn’t so much revolutionary as evolutionary. It’s not going to take all the search engine results and shuffle them massively. It may mean that sites that focus on the mechanics of SEO without focusing on great content could lose some ranking, but even that seems unlikely. Personalized search will change things up a little on an individual basis, but it by no means throws out the concept of organic search results based on SEO.

AdWords calculates a Quality Score for every one of your keywords. The Quality Score is a measure of how relevant the keyword is to the text in your ad and to users’ search queries. It is determined by a variety of factors. The Quality Score for a given keyword updates frequently to reflect its performance. Generally speaking, the higher the Quality Score, the higher the position at which your keyword activates ads, and the lower the CPC (cost per click). Sounds like you want your Quality Scores to be as high as possible, right?

Something that can be very helpful when you are designing and refining your website is knowing what it “looks like” to the bots that crawl the web and index your pages. If your site doesn’t have the information that the bots need to know what your content and graphics are all about, then they can’t do a very good job indexing your pages.

If you use Firefox, you can download and install the “User Agent Switcher” add-on. You’ll have to restart Firefox once you’ve installed it. Once you have it, in Firefox, go to Tools, then User Agent Switcher, then Options, then Options again. In the User Agent Switcher window that comes up, select User Agents and click on “Add.”

In the Description box, type something like “Google Bot” and in the User Agent box, paste this:

Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

In the App Name box type Googlebot, then click OK. Now, any time you want to view one of your pages as if you were the Google bot, you go to Tools, User Agent Switcher, Googlebot.

You might have to block cookies to view some sites, and you can do this in Tools, Options, Privacy, Exceptions (then add the URL).
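If you’d rather script this than click through browser menus, the same trick works from a few lines of Python: send the request with the Googlebot user agent string quoted above. This is a sketch using only the standard library; the function names and the example.com address are placeholders, and a site that verifies crawlers by IP address may still treat you as an ordinary visitor.

```python
import urllib.request
from typing import Tuple

# The user agent string given above.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_googlebot_request(url: str) -> urllib.request.Request:
    """Build a request that announces itself with the Googlebot user agent."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_googlebot(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Return (status code, page source) as served to that user agent.

    urlopen follows redirects on its own, so the status you get back
    belongs to the final page, not to any redirect along the way.
    """
    with urllib.request.urlopen(build_googlebot_request(url), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.status, resp.read().decode(charset, errors="replace")


# Example call (needs a network connection; the URL is a placeholder):
# status, html = fetch_as_googlebot("http://www.example.com/")
request = build_googlebot_request("http://www.example.com/")
print(request.get_header("User-agent"))
```

Comparing the source you get this way with what your normal browser receives is a quick check that you’re serving everyone the same page.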

Another thing you can do is to use a text browser like Lynx to get a rough estimate as to how your site looks to Google. Google Webmaster Tools, however, has a feature that can help too. On the Webmaster Tools dashboard, click on the “+” sign by the “Labs” link in the left hand column. When you do, you’ll see an option called “Fetch as Googlebot” as you can see in the first screen shot. Click on it, and it will download your site (or whatever URL you enter) as the Googlebot sees it.

As in the second screen shot, you’ll see the HTML source, just like what you see when you click on “View Source” in your browser. You’ll get a response code, like 200, which means everything is peachy, or 301, which means “permanent redirect.” You’ll see what kind of server your website is on and any CSS files or scripts that are called upon and included.
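If you don’t have the response codes memorized, Python’s standard library knows the official names. The helper below is just a convenience sketch (the function name is made up) that turns a code into its standard phrase plus a rough category.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return the standard phrase for an HTTP status code plus its broad class."""
    status = HTTPStatus(code)  # raises ValueError for codes the library doesn't know
    if code < 200:
        kind = "informational"
    elif code < 300:
        kind = "success"
    elif code < 400:
        kind = "redirect"
    elif code < 500:
        kind = "client error"
    else:
        kind = "server error"
    return f"{code} {status.phrase} ({kind})"


print(describe_status(200))  # 200 OK (success)
print(describe_status(301))  # 301 Moved Permanently (redirect)
print(describe_status(404))  # 404 Not Found (client error)
```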

One caveat, however, is that it doesn’t always work with PDF files. Google insists it’s working on fixing the problem, and if your site looks OK in your browser, chances are it looks OK to the Googlebot (even if it’s a PDF).

If you run a lot of scripts or have lots of layers on your sites, this can be particularly handy. If your site is mostly simple html, your normal web browser will give you a pretty good idea of what Google sees on your site.

When the Googlebot crawls your site, it uses computer algorithms to determine which sites to crawl, how often to crawl them, and how many pages to get from each site. It starts with a list of URLs from earlier crawls and with sitemap data. The bot notes changes to existing sites, new sites, and dead links for the Google index. When the Googlebot processes each page it takes in content tags and things like ALT attributes and title tags. Googlebot can process a lot of content types, but not all. It cannot process contents of some dynamic pages or rich media files.
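To get a feel for what “taking in title tags and ALT attributes” means, you can pull those same pieces out of a page yourself. This sketch uses Python’s built-in HTML parser; it’s a rough stand-in for illustration, not a model of how Googlebot actually processes pages, and the class name and sample markup are invented.

```python
from html.parser import HTMLParser


class CrawlerView(HTMLParser):
    """Collect the page title and the ALT text of every image."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.alt_texts = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "img":
            alt = dict(attrs).get("alt")
            if alt:  # images without ALT text contribute nothing
                self.alt_texts.append(alt)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawler_view(html):
    parser = CrawlerView()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.alt_texts


sample = (
    "<html><head><title>Organic Flour Shop</title></head><body>"
    '<img src="bag.png" alt="Bag of organic flour">'
    '<img src="spacer.gif">'
    "</body></html>"
)
print(crawler_view(sample))
# ('Organic Flour Shop', ['Bag of organic flour'])
```

Notice that the second image, which has no ALT attribute, simply disappears from the picture. That’s the information a bot doesn’t get.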

There has been plenty of talk about how to handle Flash on your site. Googlebot doesn’t cope well with Flash content and links that are contained within Flash elements. Google has made no secret about its dislike for Flash content, saying that it is too user-unfriendly and doesn’t render on devices like PDAs and phones.

You do have some options, however, such as replacing Flash elements with something more accessible like CSS/DHTML. Web design using “progressive enhancement,” where the site’s designs are layered, yet concatenated, will allow all users including the search bots to access content and functions. Amazon has a “Create your Own Ring” tool for designing engagement rings that is a good example of this type of functionality. Also, something called sIFR, or Scalable Inman Flash Replacement, is a text replacement technique that uses CSS, Flash, and JavaScript together to display any font in existence, even if it isn’t on the user’s computer, as long as the user can display Flash. Now, sIFR is officially approved by Google.

Google says that the bottom line is to show your users and Googlebot the same thing. Otherwise your site could look suspicious to the search algorithms. This rule takes care of a lot of potential problems, like the use of JavaScript redirects, cloaking, doorway pages, and hidden text, which Google strongly dislikes.

Google support engineers say that Google looks at the content inside “noscript” tags, but that content should accurately reflect the Flash-based content it stands in for, or else Googlebot may think it’s cloaking.
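A quick way to audit this yourself is to list whatever text sits inside your “noscript” tags and read it against what the Flash element actually shows. The sketch below does only the listing part, using Python’s built-in HTML parser; the class name, function name, and sample markup are made up, and judging whether the text matches the Flash content is still up to you.

```python
from html.parser import HTMLParser


class NoscriptText(HTMLParser):
    """Gather the visible text found inside <noscript> elements."""

    def __init__(self):
        super().__init__()
        self._depth = 0   # greater than 0 while we are inside a <noscript>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == "noscript":
            self._depth += 1

    def handle_endtag(self, tag):
        if tag == "noscript" and self._depth:
            self._depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if self._depth and text:
            self.chunks.append(text)


def noscript_text(html):
    parser = NoscriptText()
    parser.feed(html)
    parser.close()
    return parser.chunks


page = (
    '<object data="rings.swf"></object>'
    "<noscript><p>Design your own engagement ring.</p></noscript>"
    "<p>Footer</p>"
)
print(noscript_text(page))  # ['Design your own engagement ring.']
```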

According to Google engineer Matt Cutts, it’s difficult to pull text from a Flash file, but they can do a fair job of it. They use the Search Engine SDK tool that comes from Adobe / Macromedia. Most search engines are expected to make that the standard for pulling text out of Flash graphics. If you regularly use Flash, you might consider getting that tool as well and seeing for yourself what kind of text it pulls out of your graphics. In fact, Google may work with Adobe on updates to the tool.