Not too long ago, Wired magazine ran an article by Chris Anderson and Michael Wolff entitled “The Web is Dead, Long Live the Internet.” In the introduction to that article the authors write, “Two decades after its birth, the World Wide Web is in decline, as simpler, sleeker services – think apps – are less about the searching and more about the getting.”

If this is true, finding your way to the top of the search engines won’t be quite so crucial, in terms of business success. The authors note that people may spend their entire day on the Internet and never really search the Web. Specific apps for email, social sites, news and business resources make it unnecessary to type and search.

Anderson and Wolff urge the skeptics among us not to toss this off as “a trivial distinction.” They call the new model “semi-closed platforms” that use the Internet as a transportation device. But there is no basic need for a browser to display pages. The bottom line is it’s “a world Google can’t crawl.”

What does it mean to rank #1 on the major search engines in 2010 and beyond? Is it as important as being the “big dog” a few years ago? Or, as some believe, is it not quite as important as in the past?

Off to the Races?

The year 2010 may be remembered as the period of time when use of the Web and Internet changed significantly. What change, you may well ask? It seems obvious that if the majority of people are starting to fine-tune their Web use to the point of using only a handful of specific apps, the key factor is going to be speed.


Several industry observers have started to focus on page-load speed as one of two or three major factors for Web site success. For example, professionals in this field are urging Web designers and administrators to offer thumbnails of images so that the visitor doesn’t have to wait for a full-size image to load. Give the viewer the option to select the larger image. It’s a simple step.

In keeping with this trend toward almost-instant gratification, Google has come forward to emphasize page speed as a key factor. After all, for Google to be successful millions of Web users around the world must continue to search. One of the primary “enemies” of the old search pattern is the mobile device.

As cell phones and other devices take on tasks formerly completed only on a powerful desktop computer, the need to search will decline. Sites will be developed for mobile devices and for the specific applications that work best on them.

Take the Local

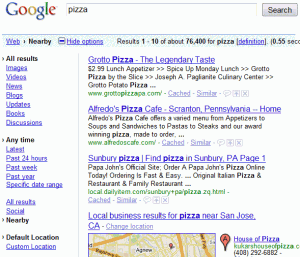

As we discuss this major change in Web use we should also consider this thing called the local search. In simple terms, being #1 worldwide will mean less if a viewer limits the search (and use) to fewer sites. For example, businesses of all types may start to limit their marketing to sectors such as Google Places or to Hotpot (interesting name). This last item is a ratings tool as well as a recommendation engine for Google Places. It’s designed to focus on local service, based on visits and recommendations from friends and other users.


Will search-engine optimization maintain its place as a key to Web site success? The answer could be “yes” and “no” together. Relevant, reliable content that is useful on a consistent basis will still put sites near the top of the priority list. One good example for incorporating the speed idea into the mix is using WebP images that will load faster than bulky JPEGs.

Here are some reasons why we should start using WebP:

Perhaps one of the most important ideas Webmasters, developers and marketers can take from this discussion comes from Anderson and Wolff, authors of the Wired article. “The fact that it’s easier for companies to make money on these platforms only cements the trend. Producers and consumers agree: The Web is not the culmination of the digital revolution.”

A Few More Thoughts

Listing the key items for WebP use and including the emphasis on making money makes the argument for page speed quite strong. However, WebP won’t be an industry-wide standard immediately. Most people will continue to use JPEG or another popular format in the near future. But designers and administrators should give serious thought to using thumbnails to reduce load time.

As for the idea of making money on the Web, we might go back to the old real estate mantra “location, location, location.” Make sure your Web content is placed properly and the page-to-page connections are efficient. Add the local ingredient to your Web marketing to secure your place in a defined area.

Here’s a sobering thought for the doubters out there. The Wired article points out that a 1997 cover story in that magazine was already urging readers to “kiss your browser goodbye.” The emphasis for the future, according to this theory, was going to be on applications for specific purposes and a Web that was getting closer to “out of control.”

Walled gardens in which users were controlled by the provider certainly didn’t prove to be the success model. Google seemed to have “it!” But now it seems that even this game-changing business model wasn’t the final answer. Stay tuned.

A traditional sitemap is a rather simple and efficient way for a Web site visitor to find a specific page or section on a complex Web site. It’s best to start with a home-page link then offer a list of links to main sections of the site. Those sections can offer details to pages. Be absolutely sure the links are accurate and take the visitor to the exact location described.

In addition, it’s generally best to provide one page (sitemap) where search engines can find access to all pages. A search engine sitemap is a tool that the Web designer or administrator uses to direct search-engine spiders based on frequency and order within the Web site.

So, when we see the term “sitemap” we are fairly sure we know what we are dealing with. The term is very descriptive of the job that this online tool is designed to do. But what about sitemaps for search engines? How do they work? In what specific ways can sitemaps help both developers and users?

Ready-Made Sitemaps for Search Engines

Several companies offer online tools that will “spider” the Web site and create a search-engine sitemap. Some of these services will work with hundreds of pages without charge. If you require the spider to work with more than 500 pages you will have to pay a basic fee. Most of these tools come with instructions on how to apply the sitemap to your site. Once the sitemap is in place you will need to submit it to search engines that will “spider” it.

This gets us to the point at which we have established a multiple-sitemap strategy. Our first, traditional sitemap is created so that visitors to your Web site have a good resource for finding their way to your content. The traditional sitemap comes with an added benefit: keyword weight to the linked pages.

Our strategy doesn’t stop there, however. The search engine sitemap lets the most-used search engines know which pages should be part of their databases. Organization and frequency are the keywords here!
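To make the "organization and frequency" idea concrete, here is a minimal sketch of a search engine sitemap built with Python's standard library. It assumes the sitemaps.org XML format (the `urlset`, `url`, `loc`, `changefreq` and `priority` elements); the page URLs and the `build_sitemap` helper are hypothetical and only illustrate the shape of the file.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, changefreq, priority) tuples."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for loc, changefreq, priority in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # changefreq hints how often the page changes (e.g. daily, weekly).
        ET.SubElement(url, "changefreq").text = changefreq
        # priority ranks pages relative to each other within this site (0.0 to 1.0).
        ET.SubElement(url, "priority").text = f"{priority:.1f}"
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages for illustration only.
example_pages = [
    ("http://www.example.com/", "daily", 1.0),
    ("http://www.example.com/products/", "weekly", 0.8),
    ("http://www.example.com/about/", "monthly", 0.3),
]

if __name__ == "__main__":
    print(build_sitemap(example_pages))
```

The output would normally be saved as sitemap.xml at the root of the site. Search engines treat the frequency and priority values as hints, not commands.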

Video Sitemap

We know the standard sitemap will help visitors find their way to the quality content on your site and the search engine sitemap is the key to an efficient and successful sitemap strategy. But you may also want to consider additional assistance for visitors who are looking for video.

When your well-designed site includes links to video players, links to other video content associated with your site and, of course, video on your own pages, you need to make sure that the major search engines (and visitors) can find what they want and need. A search engine bot will only find your video if you include all the necessary information: title and description; URL for the page on which the video will play; URL for a sample or thumbnail; other video locations, such as raw video, source video etc.

You shouldn’t be too concerned about how much space you’re taking up with video sitemaps because many tools and programs that help you establish video sitemaps will handle thousands of videos. Keep in mind that there will be limits such as a compressed limit expressed in megabytes.

We’ll use Google as an example of formats that will work for video crawling: .mpg, .mpeg, .mp4, .m4v, .mov, .wmv, .asf, .avi, .ra, .ram, .rm, .flv, .swf. All files must be accessible through HTTP.
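The items listed above (title, description, play-page URL, thumbnail URL and raw video location) map onto a video sitemap entry roughly as follows. This is a sketch that assumes Google's video sitemap extension namespace and its `video:` element names; the `build_video_sitemap` helper, the dictionary keys and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Namespace of Google's video sitemap extension (assumed here).
VIDEO_NS = "http://www.google.com/schemas/sitemap-video/1.1"


def build_video_sitemap(videos):
    """Return video sitemap XML for a list of dicts describing each video."""
    urlset = ET.Element(
        "urlset", {"xmlns": SITEMAP_NS, "xmlns:video": VIDEO_NS}
    )
    for item in videos:
        url = ET.SubElement(urlset, "url")
        # URL of the page on which the video plays.
        ET.SubElement(url, "loc").text = item["page_url"]
        video = ET.SubElement(url, "video:video")
        ET.SubElement(video, "video:thumbnail_loc").text = item["thumbnail_url"]
        ET.SubElement(video, "video:title").text = item["title"]
        ET.SubElement(video, "video:description").text = item["description"]
        # Location of the raw video file itself.
        ET.SubElement(video, "video:content_loc").text = item["content_url"]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical video entry for illustration only.
example_videos = [
    {
        "page_url": "http://www.example.com/videos/demo.html",
        "thumbnail_url": "http://www.example.com/thumbs/demo.jpg",
        "title": "Product demo",
        "description": "A short walkthrough of the product.",
        "content_url": "http://www.example.com/media/demo.mp4",
    }
]

if __name__ == "__main__":
    print(build_video_sitemap(example_videos))
```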

Mobile Sitemap

No, the sitemap doesn’t move! This Web site guide includes a tag and namespace language specific to its purpose. The core of the mobile sitemap is standard protocol but if you create yours with a dedicated tool make sure you can create mobile sitemaps.

This guide uses only URL language that focuses on mobile Web content. When the search engine crawls here it will not pay attention to non-mobile content. In a multiple-sitemap strategy you will need to create a sitemap for non-mobile locations. Make sure you include the <mobile:mobile/> tag!!

See a sample of a mobile sitemap for Google at:

http://www.google.com/mobilesitemap.xml or check other search engines to see what you need to do to make this work as it should. Submit mobile sitemaps as you would others.
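As a rough illustration of the tag and namespace mentioned above, the sketch below builds a mobile sitemap with an empty `<mobile:mobile/>` element inside every URL entry. It assumes Google's mobile sitemap namespace; the `build_mobile_sitemap` helper and the m.example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Namespace of Google's mobile sitemap extension (assumed here).
MOBILE_NS = "http://www.google.com/schemas/sitemap-mobile/1.0"


def build_mobile_sitemap(mobile_urls):
    """Return mobile sitemap XML; list only URLs that serve mobile content."""
    urlset = ET.Element(
        "urlset", {"xmlns": SITEMAP_NS, "xmlns:mobile": MOBILE_NS}
    )
    for loc in mobile_urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # The empty mobile tag marks this entry as mobile content.
        ET.SubElement(url, "mobile:mobile")
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical mobile pages for illustration only.
example_mobile_urls = [
    "http://m.example.com/",
    "http://m.example.com/news/",
]

if __name__ == "__main__":
    print(build_mobile_sitemap(example_mobile_urls))
```

Non-mobile pages would go in a separate, ordinary sitemap, in keeping with the multiple-sitemap strategy.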

Geography and Sitemaps

As with other targeted sitemaps, the subject of targeting certain countries with your sites can consume much more time and space than we have here. But here are some of the basics to get us started.

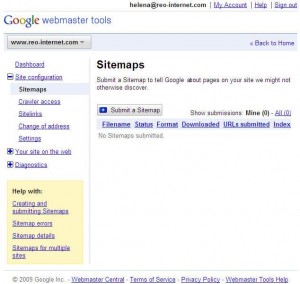

You can target specific countries when you set up your plan with services such as Google Webmaster Tools. This is accomplished when you submit an XML sitemap. The bottom line is that you can submit multiple sitemaps on one domain.

User experience shows that Web site rankings increase by 25 percent or more for organic traffic. With careful SEO effort on each of the language versions the results could be even more impressive.
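One common way to keep multiple sitemaps on one domain organized is a sitemap index file, which the sitemaps.org protocol defines as a `sitemapindex` element listing one `sitemap` entry per file. The sketch below assumes one sitemap per country or language version; the file names and the `build_sitemap_index` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index XML document that points at several sitemaps."""
    index = ET.Element("sitemapindex", {"xmlns": SITEMAP_NS})
    for loc in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = loc
    return ET.tostring(index, encoding="unicode")


# Hypothetical per-country sitemaps for illustration only.
example_sitemaps = [
    "http://www.example.com/sitemap-us.xml",
    "http://www.example.com/sitemap-de.xml",
]

if __name__ == "__main__":
    print(build_sitemap_index(example_sitemaps))
```

The country targeting itself is still configured in the search engine's webmaster tools; the index file only groups the sitemaps.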

Submit and Grow

To wrap up this very brief look at the subject of sitemaps, let’s consider a few of the top places for submitting your sitemaps. Keep in mind that search-engine technology has changed and improved so you will need to be familiar with the standards and requirements to be successful.


Don’t limit yourself to the idea of submitting one URL and having the crawler do the work. This isn’t the most efficient way to get where you want to go. Devote some time to learning about submitting to Google, Bing, Ask.com, Moreover, Yahoo Site Explorer and Live Webmaster Center among others. These seem to be the top destinations in the opinion of most industry observers.

The process of submitting a sitemap differs slightly from one place to another but in general terms you use the “configuration” link and choose “sitemaps.” You’ll need to enter your complete path description in the space provided. In Google’s example, for a sitemap at http://www.example.com/sitemap.xml, you would type sitemap.xml and choose “Submit.”

You should get confirmation and even a screen shot to show what you have accomplished.

Good luck!

Regular observers of Google and the world of Internet searching call Google Instant Search an aggressive attempt to define the way people use the Internet. The year 2010 may indeed be remembered by some as the year in which this Web-use behemoth redefined searches.

Is this a truly fundamental change? Earlier this year an article in one of the leading technology magazines heralded the end of the Internet as we know it. That article stated the belief that future Internet use will be about applications rather than about searching and finding. This basic change would take users from spending time on the search process to arriving and beginning to use the outcome much more quickly.

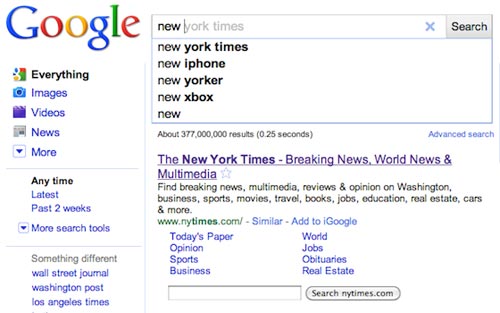

But does Google Instant Search refine the use of the World Wide Web so completely? Do we, as users, actually arrive at applications instantly? Let’s see what veteran Google-watcher Tom Krazit has to say about this. Keep in mind that Krazit has followed Google on a consistent basis because he considers it “the most prominent company on the Internet.”

Some of the key points Krazit makes about this new search technology are:

This last factor may be the most important one of all. In the minds of millions of Internet users the word Google is a verb. We don’t “search” the World Wide Web for information. We “google” it. This is a key point made by Krazit and others. Krazit sees Google Instant Search as “a combination of front-end user interface design and the back-end work needed to process results for the suggested queries on the fly.” This is quite different from what other major Internet players have done or will do.

It’s Still Great, Right?

The benefits of Google Instant Search may seem obvious but there are some search aficionados who have questions and concerns about the idea. The technology makes predictions and provides suggestions as to what a user might be searching for. These features have cut typing and search time by 2 to 5 seconds, according to Google.

That might seem like great stuff but not everyone is completely sold. Some people still want to turn this feature off and this can be done through the “Search Settings” link. The company states that this doesn’t slow down the Internet process and adds that the experienced user will welcome the efficiency of Google Instant Search, especially because it doesn’t affect the ranking of search results.

As quick and efficient as Internet searches already were (even a few years ago) Google Instant Search came along as an effort to make the process move more quickly. In its simplest terms, this technology is designed to take viewers to the desired content before they finish typing the search term or keyword. Individuals certainly don’t have to go all the way to clicking on the “Search” button or pressing the “Enter” key.
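The predict-as-you-type idea can be sketched in a few lines. This is a toy illustration only, not Google's method: it assumes a small, made-up table of past queries with popularity counts and simply returns the most popular queries that start with whatever has been typed so far. The `suggest` function and the sample data are hypothetical.

```python
def suggest(prefix, query_counts, limit=3):
    """Return up to `limit` known queries starting with `prefix`, most popular first."""
    prefix = prefix.lower()
    matches = [
        (query, count)
        for query, count in query_counts.items()
        if query.startswith(prefix)
    ]
    # Sort by popularity (descending), then alphabetically to break ties.
    matches.sort(key=lambda item: (-item[1], item[0]))
    return [query for query, _ in matches[:limit]]


# Made-up popularity counts for illustration only.
sample_queries = {
    "video games": 90,
    "video cameras": 50,
    "vintage cars": 10,
}

if __name__ == "__main__":
    # Simulate a user typing one character at a time.
    typed = "video c"
    for end in range(1, len(typed) + 1):
        print(repr(typed[:end]), suggest(typed[:end], sample_queries))
```

Each keystroke narrows the candidate list, which is why the user often sees the wanted result before finishing the query.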

Logical Extension

Rob Pegoraro, writing for The Washington Post, states that Google Instant Search is the “next logical extension” of what Google calls the “auto-complete” feature. The journalist does comment, correctly, on our “collective attention span online” and notes that the need for and use of instant-searching tools seems a bit frightening.


One thing parents and Web do-gooders won’t have to worry about is the appearance of offensive words and links to obscene destinations. Google has provided filtering capability that will prevent the display of the most common “bad” words, for example.

Pegoraro also expressed the hope that saving 2 to 5 seconds per search doesn’t become “Instant’s primary selling point.” Instant Search continues one of the features of earlier search tools in that it tailors things to the location of the user who has signed in. This certainly sped up the process without Instant Search so it should do the same and more now.

Making Connections

In 2009 a fellow named Jeff Jarvis wrote a book about Google that put the Internet giant in a special place among the Web users of the 21st century. His book carried the title “What Would Google Do?” If this title gives the impression that one Internet company is guiding the online world it’s because Google does this.

A key issue in Jarvis’ book is the connection made between users or between users and providers. Google Instant Search brings the provider/user connection to the conversation level. In a way, this technology mimics how we actually converse with friends and family members. As we construct a sentence and speak it, the listener is already forming thoughts about our subject or point.

Google Instant Search does this and we respond quickly with adjustments and new information. The search tool forms its “opinions” and makes suggestions again. It’s sort of like that annoying guy who finishes our sentences for us and this may be a problem for many long-time Internet fanatics.

With all of this in mind, we should understand that Google’s management team and developers spent a lot of time testing this technology before introducing it. Yet, it isn’t perfect by any means. Slower Internet connections may struggle with Instant Search. As mentioned earlier, the change has caused some concern among those who make their living with search-engine optimization.

One more question: Is Google Instant Search perfect for mobile use? We’ll find out over the next few months.

In order to understand the rise of paid content, it’s necessary to understand the meaning of the nofollow tag and how it is used (and some would say abused) by large sites like Twitter.

The nofollow tag (strictly speaking, the value nofollow in a link’s rel attribute) is used to tell some search engines (*cough*Google*cough*) that a hyperlink should not influence the link target’s search engine ranking. It was originally intended to reduce the effectiveness of search engine spam. Spam comments were the nofollow tag’s original targets: spam comments on blogs were used to get back links and try to squeeze a few drops of link juice from as many places as possible. By making comment links nofollow, the webmaster is in effect saying, “I am in no way vouching for the quality of the place this link goes. Don’t give them any of my link juice. Maybe it’s a good site, but I’m not taking chances.”
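In markup, a nofollow link is an ordinary anchor whose rel attribute contains the word nofollow. The sketch below uses Python's standard `html.parser` to sort the links on a page into followed and nofollowed groups. The `LinkAudit` class and the sample HTML are hypothetical, and real pages may need more careful handling.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Collect link targets, split by whether rel contains 'nofollow'."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. "external nofollow".
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollowed.append(href)
        else:
            self.followed.append(href)


# Made-up comment-thread markup for illustration only.
SAMPLE_HTML = """
<p>Post body with an <a href="http://www.example.com/partner">editorial link</a>.</p>
<div class="comments">
  <a href="http://spam.example.net/" rel="external nofollow">cheap pills</a>
</div>
"""

if __name__ == "__main__":
    audit = LinkAudit()
    audit.feed(SAMPLE_HTML)
    print("followed:", audit.followed)
    print("nofollowed:", audit.nofollowed)
```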

Nofollow links are not meant for preventing stuff from being indexed or for blocking access. The ways to do this are by using the robots.txt file for blocking access, and using on-page meta elements that specify on a page by page basis what a search engine crawler should (or should not) do with the content that’s on the crawled page.
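For contrast with nofollow, here is a sketch of how a well-behaved crawler might consult robots.txt rules before fetching a page, using Python's standard `urllib.robotparser`. The rules, the "ExampleBot" user agent and the URLs are made up for illustration. The on-page alternative mentioned above is a robots meta element in the page head, which this sketch does not cover.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content: keep all crawlers out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "http://www.example.com/index.html",
        "http://www.example.com/private/notes.html",
    ):
        print(url, is_allowed(ROBOTS_TXT, "ExampleBot", url))
```

Note that robots.txt controls crawler access to a page, whereas nofollow only says that a link should not pass ranking credit.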

Nofollow was born in 2005, and since that time, in the SEO arms race between the search engines and those who want to game them, websites started selectively using the nofollow tag to “sculpt” page rank for pages within their own site. In other words, a link going to an internal page that was ticking over nicely could be made into a nofollow link in an attempt to “conserve” PageRank juice to give to another internal page that was just starting out, or struggling, and needed some help.

Well, Google frowns on this, insisting that you’re better off in the long run to use links to your site’s pages but not to selectively use the nofollow tag in an attempt to juice up the pages you think need a boost. According to Matt Cutts, the only time you should use nofollow is when you cannot or don’t want to vouch for the content of a site. An example would be a link added by an outside user (say, in a comment thread) that you don’t trust. Cutts suggested that unknown users leaving links on your guestbook page should automatically have their links nofollowed.

Right, so what does this have to do with paid content?

Paid content companies take advantage of Google’s emphasis on domain authority, by buying up trusted sites like eHow (purchased by the seemingly insatiable Demand Media) and dumping lots and lots of esoteric content into it. Why do they do this? They get the domain authority, and the esoteric content helps ensure that when someone, somewhere searches for an article on, say, how to make a butterfly shaped cake, the content that they paid a content writer a couple of bucks for will show up at the top of the search engine results. In other words, they’re targeting the proverbial “long tail.”

How do these sites know what content to buy? They have algorithms that comb through keywords and keyword combinations and determine where there are gaps in information. Then the content buyers commission writers to write content specifically to fill those gaps. You may have heard the statistic that 20 to 25% of queries on Google have never been searched before. That’s a huge, huge number of queries. The more of those queries you can anticipate and answer, the more hits your site will get over the long term.

While link spam and comment spam were clearly short-term efforts by sites to claw their way to the top of the search engine rankings, and were relatively easy to squash using nofollow tags, paid content is more of a long-term strategy, and it’s not clear what, if anything, Google can do about it.

What seems to be happening is that sites like Twitter are kneeling down before their Google overlords (as one side of the story goes) and automatically making even the most harmless links (such as your own link to your own website on the “Bio” part of your Twitter profile) nofollow links. That has seriously ticked off a lot of long term Twitter users who legitimately poured in lots of very real, original content and can now no longer get any link love from that Bio link, even though it’s from them, to their very own site. When this happened, the metaphors about Google and Twitter ran rampant: “throwing the baby out with the bathwater,” “shutting the barn door after the horse gets out,” “cutting off its nose to spite its face,” etc.

The strategy seems to be that if nofollow links are being used as they were intended (well, as Google intended anyway), sites that are all promotion and no content would have a harder time getting to the top of the search engine results pages. Google’s fear is that paid content will game the system when it comes to odd or unusual searches, and the person who really does devote his life to making the world’s best butterfly-shaped cakes will lose out to the paid content sites who had writers or videographers hack together a 5-step instructional page or video.

Whether it will work or not is yet to be seen. As for now, paid content sites are doing pretty well for themselves. And the search engines that cater to them, like Ask.com, which wraps a few “real” sites in with sponsored results, are doing pretty well too. From February 2010 to March 2010, Ask.com’s share of search engine traffic went from 2.84% to 3.44%, while the traffic for the other (and admittedly much larger) search engines stayed relatively flat. Have a look at the screen shot of Ask.com’s results for “How do I bake a butterfly shaped cake” to see for yourself the influence of paid content on this search engine.
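To put the Ask.com figures in perspective, it helps to separate the change in percentage points from the relative growth. The short sketch below works through that arithmetic using only the two share figures quoted above; the `relative_change` helper is just an illustrative name.

```python
def relative_change(old, new):
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


FEB_SHARE = 2.84  # Ask.com share in February 2010, in percent
MAR_SHARE = 3.44  # Ask.com share in March 2010, in percent

if __name__ == "__main__":
    point_gain = MAR_SHARE - FEB_SHARE
    growth = relative_change(FEB_SHARE, MAR_SHARE)
    print(f"Gain in share: {point_gain:.2f} percentage points")
    print(f"Relative growth: {growth:.1f} percent")
```

A gain of 0.60 percentage points sounds small, but it amounts to roughly a 21 percent increase over the starting share in a single month.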

Most of us have noticed the new and improved Google search results pages by now. The biggest thing (in my opinion) is the new left hand column you get when you click the new “Show Options” link at the top of the results page. Whereas before if you wanted to search from a certain time frame, or make other refinements to the search, you had to go to the Advanced Search options page, now you can do a lot of those refinements right from your original results page.

The fundamentals of SEO apply regardless of which search engine you want to rank in, but each of the major ones has some finer points, so let’s get right to them.

You may have heard that participating in Google AdWords would penalize your site in other search engines, but Yahoo! insists that this is not the case. They really have nothing to gain by dropping sites that are relevant, and if they did, they’d be cutting off their nose to spite their face. But the age of your domain is important because a longer track record ups a site’s relevancy. It’s only one score, and it’s one you can’t do much about, but it’s something to keep in mind: SEO is a long term idea.

Yahoo! suggests registering domains for more than one year at a time. It gives your site a long-term focus and keeps you from accidentally losing a domain because you didn’t find the email that it was time to renew. They also suggest you should buy the same domain name with and without dashes. This will keep you from losing so-called type-in traffic. However, all SEO pundits say that the more dashes, the harder it is to type in, and the more spam-like it will look, so don’t get carried away.

As with Google, relevant inbound links from high quality pages are gold with Yahoo!. This is one of the hardest parts of SEO, but it’s one where there really aren’t many shortcuts. You want links from sites that belong to the same general neighborhood of topics as yours. If you have a site that sells organic flour, a link from a fishing tackle site isn’t going to help you much, if at all. And when it comes to giving out your own links, be careful here as well. If you link to a lot of sites that are or could be penalized, you could be hurting yourself by association. If you sell text links, check out every site that buys from you to make sure you’re not endorsing spam, porn, or other content that search engines frown upon.

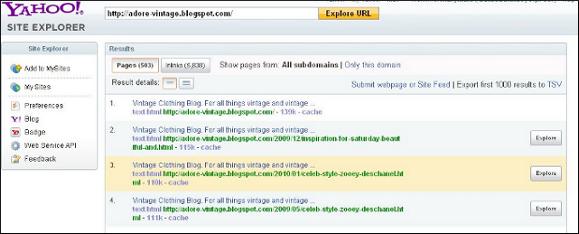

Make use of Yahoo Site Explorer to see how many pages are indexed and to track the inbound links to your site. The first screen shot shows the results of the analysis of one site. As you can see, there are 503 pages on the site, and 5,838 inlinks, each of which you can explore further. To maximize crawling of your site and indexing of pages, publishing fresh, high quality content is the key.

There have been some case studies about what Bing looks at compared to Yahoo! and Google when ranking sites. When ranking for a keyword phrase, both Bing and Google look at the title tag 100% of the time. Prominence is given a little more weight with Bing than with Google, while Google favors link density and link prominence more than Bing. Bing evaluates H1 tags, while Google does not, and Google considers meta keywords and description while Bing does not. What that all boils down to with Bing is that having an older domain and having inbound links from sites that include the primary keyword in their title tags (another way of saying relevant inbound links) are keys to optimizing for Bing. Like optimizing for the other major search engines, link building should be a regular, steady part of your SEO effort.
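Whatever weight each engine gives them, it is easy to check whether a target keyword actually appears in a page's title tag and H1 headings. The sketch below does that with Python's standard `html.parser`. It says nothing about how any search engine scores these elements; the `OnPageText` class, the `keyword_report` function and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class OnPageText(HTMLParser):
    """Collect the text of the <title> element and of each <h1> element."""

    def __init__(self):
        super().__init__()
        self._current = None  # tag currently being captured, if any
        self.title = ""
        self.h1 = []

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1.append("")

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1[-1] += data


def keyword_report(html, keyword):
    """Report whether keyword appears in the title and in any H1 heading."""
    parser = OnPageText()
    parser.feed(html)
    needle = keyword.lower()
    return {
        "title": needle in parser.title.lower(),
        "h1": any(needle in heading.lower() for heading in parser.h1),
    }


# Made-up page for illustration only.
SAMPLE_PAGE = """
<html><head><title>Organic Flour Shop</title></head>
<body><h1>Stone-ground flour, delivered</h1><p>Welcome.</p></body></html>
"""

if __name__ == "__main__":
    print(keyword_report(SAMPLE_PAGE, "organic flour"))
```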

With Bing, it’s easier to compete for broad terms. With Bing, keyword searches result in Quick Tabs that offer variations on the parent keyword. This has the effect of bringing to the surface websites that rank for those keyword combinations. The goal is for content-rich sites to convert better than sites with less relevant content. The multi-threaded SERP design brings up more pages associated with the primary keywords than would come up with a single-thread SERP list. Also, Bing takes away duplicate results from categorized result lists. This allows lower ranked pages to be shown in the categorized results.

The Bing screen shot shows the results for a search on “video cameras.” To the left is a column of subcategories. Results from those subcategories are listed below the main search results. While there are some differences to SEO for Bing, the relatively new search engine isn’t a game changer when it comes to SEO.

It sometimes seems as if SEO is synonymous with “SEO for Google,” since Google is the top search engine. And it also seems that when it comes to SEO for Google, a lot of the conventional wisdom has to do with not displeasing the Google search engine gods by doing things like cloaking, buying links, etc.

The positive steps toward SEO with Google include keywords in content and in tags, good inbound links, good outbound links (to a lesser extent), site age, and top level domain (with .gov, .edu, and .org getting the most props). Negative factors include all-image or Flash content, affiliate sites with little content, keyword stuffing, and stealing content from other sites. It isn’t so much that Google wants to seek out and destroy sites that buy links, but they want the sites with actual relevant, fresh content to have a shot at the top, and with some sites trying to game the system and get there dishonestly, Google has to find a way to deal with these sites without hurting the good sites.

In fact, Google wants users to report sites that are trying to cheat to get to the top of the search engines. On the screen shot, you can see a copy of the form found at https://www.google.com/webmasters/tools/spamreport?pli=1 for reporting deceptive practices. You have to be signed in to your Google account to use this, by the way. They want to get away from anonymous spam reports.

If you’ve read this blog for any length of time, you might be scratching your head and saying, “What?”

We’ve come down hard on link exchanges for the purpose of building your Google PageRank or search engine standing, and there’s good reason for it. It started out as a way to cheat to get to the top. You give some links, and you get some back. It sounds harmless, and when done “organically” it’s not just harmless, it’s a great way to boost your position in the search engine results pages (SERPs). Say you run a blog about motorcycle gear and you’ve been at it for a while, so you’ve built up some history. You might find some of the sites you wish would link to you (but that may never know you exist) and simply ask if they would link to your site. Quite often the answer is “yes.” Most legitimate websites have enough good will that they’ll give a promising newcomer a little help.

However, in between the link farms, which are created solely to increase back links regardless of relevancy or reputation, and doing it the old fashioned way by asking sites to link to you, there are some programs that walk a middle path. They may have a legitimate website where site owners can categorize their site and find other sites that are about the same (or nearly the same) topic. For example, such a site might have a category for cooking blogs and another category for political blogs, another for sites on antique book appraising, and a bunch more categories.

The idea is that each day you’ll go to your category or one that’s closely related, look at several sites, and click a button that gives that site some link love by placing a link to it. And every day a bunch of other sites in your niche will do the same thing and hopefully leave you some back links in exchange.

Is this “cheating”? Will search engines penalize you for this?

It’s hard to say.

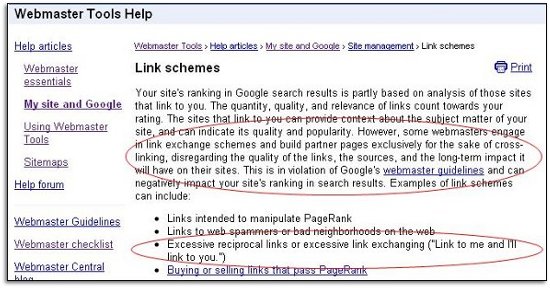

In the screen shot you can see part of Google’s Webmaster guidelines that kind of / sort of address this. Clearly, exchanging links for the sake of links regardless of relevancy or quality is not good. Google will penalize your site for this.

On the other hand, the guidelines say that “link schemes” can damage your standing, and they include among “link schemes”:

Excessive reciprocal links or excessive link exchanging (“Link to me and I’ll link to you.”)

The important word in that statement is “excessive.” How do you define “excessive”?

One way that link exchanges may seem excessive has less to do with their sheer numbers and more to do with their relevancy. If you run a site about organic gardening and exchange links with a couple of, ahem, “adult entertainment” blogs, you’re doing much more harm than good.

If, however, you participate in a link exchange with a relevant category and you find a few sites that you wouldn’t have found otherwise, that are good fits for your site as far as relevancy and quality, then there’s no real problem. If you had found those sites organically, you probably would have asked for links from them anyway. You just had a little help finding them.

Look at it this way: If Google gave no influence to back links, either good or bad, or if Google didn’t exist, would you link to those sites and ask for links to yours? Or alternatively, would you link to those sites even if you had no idea if they would link to you? If the answer is yes, then you’re probably OK.

If you run a site and you have, say, three hours a day to devote to research and/or link building (I wish!), then you probably shouldn’t devote more than one of those hours to participating in a targeted link exchange. Spend the other two working on other off-page optimization, like searching out new sites to evaluate and possibly ask for back links. That way you won’t risk an explosion in link numbers that would tip off the search engine gods and make them think you’re up to some illegitimate link-swapping.

When you find a relevant site that you really like, and you read it and are convinced it would be a good back link to have, asking directly for that back link means a lot more than the three or four links you might get on an “I’ll link to you if you link to me” site. For one thing, it’s great to get that vote of confidence when someone likes your site enough to ask for a link. The first time some cool site contacts you to ask for a back link is a milestone of sorts.

And another thing to consider is this: how many high quality sites do you know of that have link exchange “badges” – particularly above the fold – that indicate their participation in link exchange sites? Not many, I’d bet. While they’re not exactly signs of desperation, they show exactly what you’re up to, and indicate that maybe you can’t get back links any other way. Fair? Of course not. But that’s the reality you have to deal with.

Link farms and paid link exchanges really will harm your site, no question. Targeted link exchange programs where you give and ask for links based on relevancy and perceived quality can be OK, as long as you don’t depend “excessively” on these sites for links.

Some people have the idea that the best domain name is the most generic, but that’s not always the case. In most cases, a domain name tied to your brand is better. Think about it. If you’re searching for a certain brand of organic pet food, a domain name matching that brand is going to be more intuitive to you than simply organicpetfood.com (which, as of this writing, is available). The name that you use to advertise your products is almost always the best name for your domain. People who don’t search will try this first in their browser, and if it matches, they’ll almost certainly remember it.

Link building is still one of the most important factors in your search engine results page (SERP) rankings and in your Google PageRank, and will be for the foreseeable future. Links are still the fundamental connectors on the web, and legitimate links are still a great way to judge the importance of a site and just how trustworthy it is. Google’s search algorithm has learned to devalue purchased or traded links and to emphasize trusted, genuine links. And when those links are further bolstered by the vintage of the domain, user data, and other factors that are hard to fake, that’s when SERP rankings rise.

Here are 15 great ways to build up the kind of links that will increase your PageRank and your SERP position.

Link to sites in the news in your niche. To find them, do a Google news search on your niche or keywords, as you can see in the screen shot for news searches on 401k investing.

And for good measure, here’s another link: 5 really bad ways to get links.

You can’t get away from link building when it comes to getting traffic, moving upward in the SERP listings and increasing your PageRank. But don’t try to take the easy way out. The work you put in getting links legitimately will be well worth it over the long term, while ill-gotten links will sabotage your efforts very quickly.

Statistics from early 2009 claimed that iPhone users accounted for some two-thirds of all mobile browsers. Now, while that particular statistic has been questioned and debated, there is little doubt about the effect of the iPhone on mobile browsing. The advent of the Google Android phone will only make mobile web surfing more mainstream than it already is. When it comes to optimizing your web content for Google Mobile Search, there are a number of things you can do.

Some people say that you should make a mobile version of your regular website, while others say that you should optimize your existing site for mobile browsing. But whether it’s your normal site optimized for mobile, or a new mobile version of your site, there are steps that anyone wanting to rank high in mobile search results should take.

Step one is to make sure that your website is mobile compliant. This means that your pages are formatted for people browsing the web with their phones. Mobile browsing implies a lack of time to complete a search. Perhaps instead of mobile “browsing,” the term should be something more like mobile “hunting.” But since mobile users are also short on screen space, the pages should be designed to cater to this reality. Do you know what your site looks like on a mobile web platform? If not, do a mobile search to see. You’ll notice that a lot of your site’s goodies are unavailable. But this makes some choices all the more obvious.

If these things don’t check out, then you need to make some changes to get your site ready for what many experts see as the coming tsunami of mobile searching.

Why should you go to the trouble? The industries currently seeing the most growth in mobile searches are business, entertainment, and travel. According to the Mobile Optimization Association, mobile searchers tend to be young, high-income professionals with promising careers, or, to put it more bluntly, people with more disposable income than usual.

Web pages for mobile browsing are created in XHTML or WML. They must be W3C compliant. W3C stands for “World Wide Web Consortium,” the body that sets guidelines about how a web page should be structured and publishes best practice design principles for webmasters. Without going into the nuts and bolts of getting your site to be W3C compliant, you should know that there is a W3C Code Validator at http://validator.w3.org/ that anyone can use to check a page’s markup against those guidelines, which helps your site render in a similar manner on all the major browsers and platforms.
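To make the validator a little more concrete, here is a minimal Python sketch that builds a link asking the validator to check one of your pages. It only assembles the address and does not contact the network. The `check?uri=` query form is an assumption about how the validator accepts a page address, and `validator_link` and the example site address are hypothetical names used purely for illustration.

```python
from urllib.parse import urlencode

# Base address of the validator named in the text.
VALIDATOR_BASE = "http://validator.w3.org/"


def validator_link(page_url: str) -> str:
    """Return a link that asks the validator to check page_url.

    Assumption: the validator accepts a "check?uri=<address>" query.
    """
    # urlencode percent-escapes the page address so it is safe in a query string.
    query = urlencode({"uri": page_url})
    return f"{VALIDATOR_BASE}check?{query}"


if __name__ == "__main__":
    # Hypothetical site address, used only as an example.
    print(validator_link("http://mobile.yourdomainname.com/"))
```

You could paste the resulting link into a browser, or generate one for each page on your site and work through them one at a time.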

Before deciding exactly how to optimize your site for mobile, there are a few things to know about the people who use mobile browsers. First of all, they tend to use the same search engine on their mobile device as they use on their PC. Even though there are 234 million wireless subscribers in the U.S., only 10% use mobile search (which is still 23 million people). Adoption rates of mobile browsing are much higher in Europe. Finally, mobile searchers are goal-oriented: they want to get the info and get out. They don’t tend to browse or surf.

A few general practices for optimizing your site are:

There are two main opinions when it comes to optimizing your site for Google Mobile: 1) Optimize your existing site; and 2) Create a new mobile-only site. Both approaches have their advantages and disadvantages, which we’ll go into next.

If you want to optimize your existing site, make sure that your pages are device-independent, so that they work on whatever handset a mobile searcher happens to use. Search results on mobile devices use a different data set than web browsers do.

Use external CSS style sheets because they limit how much code has to be downloaded and are helpful in scaling up or down for different screen sizes. You can have a separate style sheet for handheld devices.

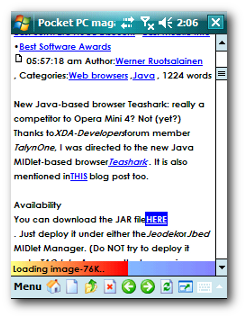
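As a rough sketch of the separate style sheet idea, the Python snippet below generates the two `<link>` tags you might place in a page’s head: one for regular screens and one for handheld devices. The file names `main.css` and `mobile.css` and the function `stylesheet_links` are hypothetical, and the sketch assumes the CSS `handheld` media type is how you want to target phones; not every phone browser honors that media type, so test on real devices.

```python
from html import escape


def stylesheet_links(screen_css: str, handheld_css: str) -> str:
    """Build <link> tags that load a different external style sheet per media type."""
    tags = []
    for href, media in ((screen_css, "screen"), (handheld_css, "handheld")):
        # escape() keeps unusual characters in a file name from breaking the attribute.
        tags.append(
            f'<link rel="stylesheet" type="text/css" '
            f'href="{escape(href, quote=True)}" media="{media}" />'
        )
    return "\n".join(tags)


if __name__ == "__main__":
    # Hypothetical file names, used only as an example.
    print(stylesheet_links("main.css", "mobile.css"))
```

Because both style sheets live in external files, the page markup stays the same for every visitor and only the styling rules differ by device.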

Use text links rather than images. Images may not download at all and will increase page loading times. Here are a few do’s and don’ts for optimizing an existing site for mobile search.

The argument for creating a mobile-only site is that mobile users are goal-oriented rather than simply browsing. Some sites have taken to using a subdomain approach rather than using a separate domain like .mobi. That would give your mobile site a name like mobile.yourdomainname.com. It allows you to retain the “brand” of your main domain rather than having to rebuild branding for a new domain name. Here are some tips for optimizing a mobile-only version of your site.