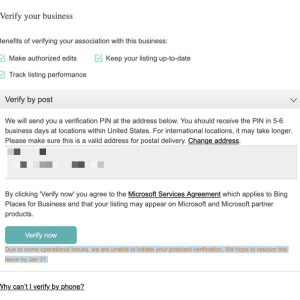

Microsoft’s Bing Places for Business has been broken for months, with no fix in sight. The verification page still displays the message: “Due to some operational issues, we are unable to initiate your postcard verification. We hope to resolve this issue by Jan 31.”

At first, the deadline kept getting pushed further down the calendar. Now Microsoft has simply stopped updating it, leaving businesses stuck and unable to verify their listings, with the message more than a week out of date.

Seriously, Microsoft—you’re a $3.5 trillion company, yet you can’t manage to fix a basic function that allows local businesses to appear on your map? Instead, you’re too busy pushing AI into every product while neglecting essential services. Maybe focus on making Bing Places actually work before expanding into the next big thing. This is beyond ridiculous.

While several companies formed the Fairsearch.org group to try to block the Google-ITA deal, claiming it is yet another step toward a Google monopoly of the airline ticket market, Bing has decided not to complain but to fight. In addition to buying Farecast, a predictive engine for flight prices, about a year ago, it is now teaming up with one of the popular travel search engines, KAYAK. The deal seems beneficial for both sides. After all, KAYAK is probably also worried about Google acquiring ITA, despite its talk of “welcoming Google as a competitor”.

In its announcement, Bing flatters KAYAK, calling its new partner “a leading innovator in travel search”, and talks about a “more comprehensive travel search experience”. The deal should benefit people who want to plan and book via Bing. Although this looks like an attempt to keep people from leaving Bing, the move is actually a counter to Google entering travel search. Of course, although “Google is not winning every niche it enters”, as KAYAK CTO and co-founder Paul English put it, it can affect the market heavily.

So, there is nothing left but to wish good luck to both Bing and KAYAK in their struggle.

According to the latest StatCounter data, Google has dropped below 90% of search engine market share for the first time since July 2009. Still, the figure of 89.94% remains a major headache for its competitors, Yahoo and Bing, which combine for just over 8% of global search. In the European market Google’s dominance is even greater, with about 94% market share.

Although Bing surpassed Yahoo globally in January, in the US market Yahoo! is still the number two search engine, with a 9.74% share compared to Bing’s 9.03%. Google has dropped below 80% once again, with 79.63%.

In Asia, Baidu has once again beaten Bing for the number three spot (Yahoo! is second). It must be noted, however, that StatCounter only considers English searches, so the results have to be viewed with care. For example, in Russia Google is reported as the market leader with 52%, with Yandex at 46%, and in the Czech Republic the picture looks even brighter for Google, which beats local Seznam 79% to 19%. Of course, when native-language searches are considered, both Yandex and Seznam are more popular than Google in their local markets.

But even so, in China Baidu is a clear number one, with almost 70% of the market (compared to Google’s 29%), and in South Korea Naver is back to an absolute majority (55.15%), with both Google and Daum losing ground (31.7% and 7.85% respectively).

I woke up this morning thinking further about my statement yesterday that Microsoft should buy Twitter. I really think that if Google does not buy Twitter and it lands in the hands of Microsoft, it could become a great equalizer. Bing’s real-time search results would be exclusive and therefore, at the very least, very different from Google’s. Bing needs to do something; it is sort of floundering, as many companies do when they are not really committed to being the best.

On the other hand, if Facebook buys Twitter, Google has a much bigger problem: potential elimination from real-time search. Facebook is the number one visited website in the world. That is great, but their problem is that their visitors are not interested in buying anything. They do not click on ads, they do not convert into $$, and this is becoming a problem for the future of Facebook. It is the old-school internet business model on steroids: build it, make it cool and free, get traffic, and with traffic all your problems will be solved. If you roll Twitter into Facebook, you do not get any better profit generation, but you now hold all the cards in real-time search. Facebook could place extraordinary value on this real-time data and begin to charge search engines massive fees to access their websites and data. If the search engines do not agree to pay these outrageous fees, Facebook could begin to build its own search engine. Even if its algorithm were not very robust to begin with, having the real-time data from Facebook and Twitter would ensure that it provides phenomenal real-time information (that would not be found anywhere else) and could use this data VERY effectively. It is a fact that no one is really tweeting or Facebooking about the spammy Viagra website they found on page one of Google, nor the insurance website they found in BING. Therefore Facebook would be able to quickly and sharply reduce spam, carve out a place in search, and secure a very bright future for profitability, along with a serious chunk of what Google and BING currently have.

As an internet marketing professional, I really do not care who does what. I do not own the game; I just play by the rules set forth by people far smarter and wealthier than I. I must say, though, I really like Twitter in the hands of Facebook or Microsoft. Let’s see what Google is really made of…

Bringing the most relevant results to the user is the quest of every search engine. Fighting spam is one aspect of this issue. The other one is personalization – showing the results that would be the most interesting to the SPECIFIC searcher. Hence the localization, hence the search history….

Bing has recently followed Google down that path, applying city-based localization to query results in the US. It will now give additional weight to local businesses, especially service providers. This is another step forward, as local Bing searches in various countries have already been showing different results for quite a while. However, for large countries such as the US this might not be enough, so an additional refinement is now applied based on the city you are in. It must be noted that the results are not entirely different; local businesses are simply given some “extra points” by the search algorithm.
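The city-level “extra points” idea can be sketched as a simple re-ranking step. This is a minimal illustration, not Bing’s algorithm: the `Result` type, the base `relevance` scores, the per-result `city` field and the fixed `LOCAL_BOOST` value are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Result:
    url: str
    relevance: float             # base relevance from a hypothetical ranker
    city: Optional[str] = None   # city of the business, if it is a local listing


# Hypothetical size of the "extra points" given to a same-city business
LOCAL_BOOST = 0.2


def rank_with_city_boost(results: List[Result], user_city: str) -> List[Result]:
    """Sort results by relevance plus a fixed bonus for businesses in the searcher's city."""
    def score(r: Result) -> float:
        bonus = LOCAL_BOOST if r.city == user_city else 0.0
        return r.relevance + bonus

    return sorted(results, key=score, reverse=True)


if __name__ == "__main__":
    results = [
        Result("https://national-chain.example", 0.9),
        Result("https://seattle-plumber.example", 0.8, "Seattle"),
        Result("https://boston-plumber.example", 0.8, "Boston"),
    ]
    for r in rank_with_city_boost(results, "Seattle"):
        print(r.url)
```

Nothing is filtered out here; the boost only reorders the list, which mirrors the point that the results are not entirely different, just nudged toward local businesses.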

Another aspect is using your past search queries in the results. Bing (as Google has for some time already) tries to “learn your preferences” based on the searches you conduct and the results you pick from the presented list. Those picks are stored in your search history and shown more frequently (or higher) in the result list when a similar query is submitted.

It seems that the “those who bought this also liked that” feature, used by many online stores and other websites, is now entering the search engine world.
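The history-based re-ranking described above can be sketched as follows. This is a toy built on assumptions, not how Bing or Google actually store or use history: the `SearchHistory` class, treating any query that shares a word as “similar”, and the `weight` parameter are all hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List, Tuple


class SearchHistory:
    """Hypothetical per-user history: how often each URL was clicked for each query term."""

    def __init__(self) -> None:
        self.clicks: DefaultDict[str, Counter] = defaultdict(Counter)

    def record_click(self, query: str, url: str) -> None:
        for term in query.lower().split():
            self.clicks[term][url] += 1

    def personal_score(self, query: str, url: str) -> int:
        # Past clicks on this URL for any query sharing a term with this one
        return sum(self.clicks[term][url] for term in query.lower().split())


def personalize(results: List[Tuple[str, float]], query: str,
                history: SearchHistory, weight: float = 0.1) -> List[str]:
    """Re-rank (url, base_score) pairs, adding a bonus for URLs the user picked before."""
    scored = [(url, base + weight * history.personal_score(query, url))
              for url, base in results]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [url for url, _ in scored]


if __name__ == "__main__":
    history = SearchHistory()
    history.record_click("cheap flights", "https://kayak.example")
    history.record_click("flights to paris", "https://kayak.example")
    results = [("https://airline.example", 0.5), ("https://kayak.example", 0.45)]
    print(personalize(results, "cheap flights", history))
```

With an empty history the base order is kept; after two earlier clicks the previously chosen site moves up for a similar query, which is the “those who bought this also liked that” idea applied to search.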

I was in the shower this morning, considering the impact that Twitter and Facebook have had on Google’s search results. After reading Rand’s test results comparing Twitter links with traditional text links for ranking pages in Google’s search results, it is clear that Google is placing significant weight on links from Twitter and Facebook. Based on this, one would assume that if Facebook and Twitter no longer permitted Googlebot to access their websites, Google’s algorithm would have to be seriously adjusted. It would probably push Google’s search results toward displaying only yesterday’s news and information, instead of the real-time results currently based on the linking patterns Googlebot gets from Twitter and Facebook.

I was just reading a blog post on Search Engine Land about Twitter being acquired by someone, whether it be Google, Microsoft or Facebook. I find this concept interesting: whoever buys Twitter will have the most up-to-date real-time content online. I believe that Microsoft must be the one to acquire Twitter. This would give Microsoft its first leg up on Google in search. Microsoft could probably license access to Twitter to Google for hundreds of millions of dollars.

The facts are quite simple: without Twitter and Facebook links, Google is sort of screwed. Unfortunately, Google’s recent behavior has created a bit of industry anger toward its online business practices. I think this is why Groupon did not sell to Google.

I wanted to mention that this blog post was written with the assistance of Dragon NaturallySpeaking. If you have hesitated to use speech-recognition software, I would say now is the time to give it a try. I think this software will make it much easier for me to blog from now on.

While I’m giving NaturallySpeaking a plug, I may as well mention the really, really cool viral marketing tool they’ve built on their website. It’s called Fingers of Fire.

I am really getting tired of Google presenting information and blog posts from 2007. The authority Google gives to these old blog posts and news items causes their results for particular topics to just STINK.

So I switch search engines to BING, or, for today, try Blekko. Both of these engines tend to do a better job of weeding old content out of their results, which is great. But… and this needs to be a big BUT…

What is with the results in BING and BLEKKO showing websites from every English-speaking country? A search on Blekko for “promotional mugs” presents results from all over the world, and, although it is not quite as bad, the same thing happens with BING.

http://blekko.com/ws/promotional+mugs

http://www.bing.com/search?q=promotional+mugs&go=&form=QBRE&qs=n&sk=&sc=8-16

Which search engineers decided that it is a good idea to present these international results to a US search query? It seems to me that this is the most basic part of a relevancy algorithm.

I can provide free tips to the engineers at Blekko and Bing:

1.) If the domain ends in .co.uk, these results should be provided to people searching in the United Kingdom.

2.) If the domain ends in .com.au, these results should be provided to people searching in Australia.

3.) If my IP address is based in the United States, please only show me websites whose IP address is in the US. (Take this same theory and apply it to whatever country the search query originates from.)
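The three tips above amount to a simple country filter. The sketch below is a hypothetical illustration: the small `CCTLD_COUNTRY` table is incomplete on purpose, and each result’s `server_country` is assumed to come from an IP-geolocation lookup that is not shown. It uses the domain’s country code when there is one (tips 1 and 2) and otherwise falls back to the server’s location (tip 3). Results whose country cannot be determined are kept rather than dropped, which is a design choice of this sketch, not part of the tips.

```python
from typing import Dict, List, Optional, Tuple
from urllib.parse import urlparse

# Hypothetical, deliberately tiny mapping of country-code suffixes to ISO country codes
CCTLD_COUNTRY: Dict[str, str] = {
    ".co.uk": "GB",
    ".uk": "GB",
    ".com.au": "AU",
    ".au": "AU",
    ".ca": "CA",
}


def domain_country(url: str) -> Optional[str]:
    """Return the country implied by the domain suffix, or None for generic TLDs like .com."""
    host = urlparse(url).hostname or ""
    # Check longer suffixes first so ".co.uk" is matched before ".uk"
    for suffix in sorted(CCTLD_COUNTRY, key=len, reverse=True):
        if host.endswith(suffix):
            return CCTLD_COUNTRY[suffix]
    return None


def filter_for_country(results: List[Tuple[str, Optional[str]]],
                       searcher_country: str) -> List[str]:
    """Keep results whose domain (or, failing that, server location) matches the searcher.

    Each result is a (url, server_country) pair; server_country is assumed to come
    from a separate IP-geolocation lookup and may be None if unknown.
    """
    kept = []
    for url, server_country in results:
        country = domain_country(url) or server_country
        if country is None or country == searcher_country:
            kept.append(url)
    return kept


if __name__ == "__main__":
    results = [
        ("http://www.mugs.example.co.uk/", "GB"),
        ("http://www.mugs.example.com.au/", "AU"),
        ("http://www.mugs-usa.example.com/", "US"),
    ]
    print(filter_for_country(results, "US"))
```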

It is really sad when in general the entire internet community is looking for alternatives to Google, and this is the best competition we can come up with?

No wonder Google is taking over…..

Ask.com and Bing are very anxious to prove to the world that they can beat Google, even in minor things like Image Search, which Bing has been enhancing constantly over the last several months, or in a Search Engine Jeopardy contest run by Stephen Wolfram. Well, it seems Google’s competitors still have some work to do, as the search industry leader was victorious once again.

The SE Jeopardy test consisted of Jeopardy questions randomly selected from a database of around 200,000, which were fed as search queries into various engines. The developers then counted how many correct answers appeared on the search results page, and also how many appeared in the page that each search engine presented as the top result.
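The two measurements described above can be expressed as a simple scoring function. This is a hypothetical sketch, not Wolfram’s actual test harness: it assumes each trial provides the expected answer, the text of the results page and the text of the top-ranked document, and it counts a case-insensitive substring match as a “correct answer”.

```python
from typing import List, Tuple

# (expected answer, text of the search results page, text of the top-ranked document)
Trial = Tuple[str, str, str]


def jeopardy_scores(trials: List[Trial]) -> Tuple[float, float]:
    """Return (% of answers found on the results page, % found in the top-result document)."""
    if not trials:
        raise ValueError("need at least one trial")
    on_page = sum(1 for answer, page, _ in trials if answer.lower() in page.lower())
    in_top = sum(1 for answer, _, top in trials if answer.lower() in top.lower())
    n = len(trials)
    return 100.0 * on_page / n, 100.0 * in_top / n
```

Substring matching is crude (it would credit “Nile” inside “senile”), so a real evaluation would need stricter answer matching; the sketch only shows how the two percentages relate to each other.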

The results were as follows:

Percentage of correct answers appearing somewhere on the first page: Google – 69%, Ask.com – 68%, Bing – 63%, Yandex – 62%, Blekko – 58%, Wikipedia – 23%.

Percentage of correct answers appearing in the top result of the page: Google – 66%, Bing – 65%, Yandex – 58%, Ask.com – 51%, Blekko – 40%, Wikipedia – 29%.

Obviously Wikipedia didn’t stand much of a chance, as it was only one website competing against “the whole internet”. Still, it is worth noting that about one-third of Jeopardy answers can already be found in Wikipedia…

As for the search engines, Google has beaten the competition, although the margins are not that big. But based on these results, Ask and Blekko have to do a better job of listing the most relevant link at the top (see how their percentages dropped when the top-ranked document was checked). And Bing is “almost there”, but still a fraction behind Google.
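The drop for Ask and Blekko is easy to quantify from the percentages listed above. The snippet only subtracts the post’s own figures; no new data is involved.

```python
from typing import Dict

# Percentages as reported in the post for the Search Engine Jeopardy test
first_page = {"Google": 69, "Ask.com": 68, "Bing": 63, "Yandex": 62, "Blekko": 58, "Wikipedia": 23}
top_result = {"Google": 66, "Bing": 65, "Yandex": 58, "Ask.com": 51, "Blekko": 40, "Wikipedia": 29}


def change_in_points(first: Dict[str, int], top: Dict[str, int]) -> Dict[str, int]:
    """Percentage-point change from 'anywhere on the first page' to 'in the top result'."""
    return {engine: top[engine] - first[engine] for engine in first}


if __name__ == "__main__":
    deltas = change_in_points(first_page, top_result)
    for engine, delta in sorted(deltas.items(), key=lambda kv: kv[1]):
        print(f"{engine:10s} {delta:+d}")
```

Ask.com loses 17 points and Blekko 18, while Google loses only 3 and Bing’s score actually rises by 2.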

Yandex’s numbers were very impressive, as it is basically a local Russian search engine. If the test had been done in Russian (or at least based on the Russian Jeopardy analogue, “Svoya Igra”, which includes fewer questions about American culture and history), Yandex would probably beat Google, exactly as it does in the Russian search engine market.

In summary, nobody can beat Google in providing relevant information. Not just yet. So, when you want to know “What is” something – don’t ask and don’t bing. Google it!

It seems that Bing decided to combat Google first of all in the Images section. Merely a month after introducing its Image Categorization Panel, Bing now applies a new look to the Bing Image Search page.

It now shows the 20 most searched images of the day: people, events, places, animals, etc. Yesterday, Squirrel Appreciation Day brought “Cute Squirrel” to the top of the list. Today, the “National Geographic Photo Contest” occupies the number one Bing Image Search spot, while “Todd Palin” (No. 6) beats “Sasha Obama” (No. 10) and “Chelsea Clinton Wedding”.

Of course, once the search is done the top list disappears – but it can be easily retrieved via the “Browse top image searches” link just below the categorized search tabs bar.

The option is currently available on the US, Australian and Canadian Bing websites.

Everybody knows that Yahoo US has teamed up with Bing in order to fight Google in the North American search market. In other parts of the world, however, strange things are happening.

Since the start of 2011, Yahoo and Bing have also been a joint force in Australia, Mexico and Brazil. In the UK, however, the deal has not been sealed yet. People are saying it is only a matter of time, noting that certain Yahoo search results look identical to Bing’s while speculating about “two different indexes”, but it remains to be seen whether Yahoo UK will be powered by Bing in the end. Why not, anyway? Where else would Yahoo go? To Google? Well, yes!

Yahoo! Japan, for example, has formed a partnership with Google. The deal (Google US will supply the technology for Yahoo! Japan) was recently approved by Japan’s FTC (Fair Trade Commission), the body responsible for preventing market monopolization. And although the approval is not permanent, and the FTC stated it will monitor the activity of the combined team closely, it was a major blow for both Microsoft and local search engines. Yahoo US was not very happy either, but was unable to stop the move, as it owns only about 30% of Yahoo! Japan.