I am sure many people out there have tried to get included in Google News. We have been successful in getting multiple websites included in Google News. Recently we have been working fairly hard at blogging, so we decided to try to get SEO Moves included in Google News. Just for fun, I am going to include the thread of conversation I have had with the people at Google News:

#1- Request Inclusion in Google News

#2- wait……

#3- Google News Replied:

“Hi john,

Thank you for your note.

We reviewed your site and are unable to include it in Google News at this time. We currently only include sites with news articles that provide timely reporting on recent events. This means we don’t include informational and how-to articles, classified ads, job postings, fictitious content, event announcements or advice columns.”

#4- SEO Moves Reply Back:

“Hi Google,

In looking at a search today for search engine optimization, I see the results include a bunch of posts from ZDNet (screen capture included). These posts are very much along the lines of what we post, but they are written by one man who proclaims himself to “NOT” be an SEO expert.

I wish you would reconsider our inclusion, as we are giving people strong, real-time advice and tips. It is 100% white hat information, and we are working hard to help small and medium-size businesses succeed online.”

#5- Google News Reply:

“Hi John,

Thank you for your reply and for providing us with this additional information about your site. As we previously mentioned, the articles in Google News report on recent events. We currently don’t include informational and how-to articles, classified ads, fictitious content, job postings, event announcements, or advice columns.

Thanks for your interest in Google News.

Regards, The Google News Team”

Let’s take a look at Google News today, just for fun:

Using page speed as a ranking factor in the search-engine process will only affect a tiny percentage of sites, according to Google. The company provides a number of ways to speed up sites, and Webmasters should probably take a look at them at code.google.com.

The key is using filters that drive down to best performance practices on the pages. The module includes filters to optimize JavaScript, HTML and CSS style sheets, along with filters to optimize JPEG images and PNG images.
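
To give a rough idea of what a CSS optimization filter does, here is a minimal Python sketch of CSS minification: it strips comments and whitespace the browser doesn’t need. This is not mod_pagespeed’s actual code, and `minify_css` is a hypothetical helper; real filters handle many edge cases this one ignores.

```python
import re

def minify_css(css: str) -> str:
    """Rough CSS minifier: strips comments and redundant whitespace.

    Simplified illustration only: it does not handle strings containing
    '/*', and trimming around ':' can change selectors such as 'a :hover'.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # trim around punctuation
    css = css.replace(";}", "}")                           # final semicolon is optional
    return css.strip()

source = """
/* page header */
h1 {
    color: #333;
    margin: 0 auto;
}
"""
print(minify_css(source))
```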

When word first came out about mod_pagespeed there was a tendency to panic. Developers and Webmasters found that, in addition to the dozens of factors affecting search-engine rankings, Google was going to start using speed as a ranking factor. The questions were:

Here’s the bottom line on mod_pagespeed: Webmasters and developers will use this to improve performance of Web pages. But there is some specific information these developers will need to know. This is open-source Apache software used to automatically optimize pages and content served with the Apache HTTP server.

Using mod_pagespeed in combination with the correct compression and caching steps should result in significant improvement in loading time.
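
Compression alone can shrink text-heavy pages dramatically. The short Python sketch below uses the standard `gzip` module to compare sizes for a hypothetical, repetitive HTML snippet; on Apache, on-the-fly compression is usually handled by a module such as mod_deflate rather than by application code.

```python
import gzip

def compression_ratio(body: bytes) -> float:
    """Return the gzip-compressed size as a fraction of the original size."""
    return len(gzip.compress(body)) / len(body)

# Hypothetical, highly repetitive markup, similar in spirit to link-heavy pages.
html = b"<ul>" + b"<li><a href='/page'>Example link</a></li>" * 200 + b"</ul>"
ratio = compression_ratio(html)
print(f"{len(html)} bytes -> {ratio:.1%} of original size after gzip")
```

Real pages are less repetitive than this sample, so expect smaller (but still worthwhile) savings.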

Basic Steps

Before committing to mod_pagespeed, be sure you are working with Apache 2.2, since there are no plans to support earlier versions. If you’re up to it, you can develop a patch for those earlier versions.

According to the best information available, mod_pagespeed is available as binary packages for i386 and x86-64 systems, and the source code can be checked out through svn. Google’s instructions add: “It is tested with two flavors of Linux: CentOS and Ubuntu. The developer may try to use them with other Debian-based and RPM-based Linux distributions.”

Several filters have already been mentioned, but Google puts special emphasis on “exciting experimental features such as CSS outlining.” In mod_pagespeed, outlining means moving large blocks of inline CSS out of the HTML and into separate external files, which the browser can cache and reuse across pages. It is not related to the CSS outline property that draws a line around page elements.
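
mod_pagespeed’s outline filters move large inline style blocks into external files so browsers can cache them. The minimal Python sketch below imitates that idea; it is not mod_pagespeed’s implementation, `outline_css` and the generated file names are hypothetical, and the 100-byte threshold is an arbitrary assumption (mod_pagespeed has its own configurable threshold).

```python
import re

MIN_BYTES = 100  # assumed threshold: smaller inline blocks stay in the HTML

# Matches only attribute-less <style> tags, a simplification for this sketch.
STYLE_RE = re.compile(r"<style>(.*?)</style>", re.DOTALL | re.IGNORECASE)

def outline_css(html: str, base_name: str = "outlined"):
    """Move large inline <style> blocks into separate CSS files.

    Returns the rewritten HTML and a dict mapping file names to CSS text.
    """
    files = {}

    def replace(match):
        css = match.group(1)
        if len(css.encode("utf-8")) < MIN_BYTES:
            return match.group(0)                 # small block: leave inline
        name = f"{base_name}-{len(files)}.css"
        files[name] = css                         # content for the external file
        return f'<link rel="stylesheet" href="{name}">'

    return STYLE_RE.sub(replace, html), files

page = "<head><style>p{margin:0}</style><style>" + "h1{color:red}" * 10 + "</style></head>"
new_html, css_files = outline_css(page)
print(new_html)
print(list(css_files))
```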

Achieving optimum page speed is the goal, but Webmasters have to take compression, caching and order of download into consideration. It’s also important to cut down the number of round trips between the browser and the server.
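
To see why round trips and download size matter, here is a deliberately simplified load-time model in Python: each round trip costs one network latency, and the bytes cost transfer time at a given bandwidth. The function name and all numbers are hypothetical, and real browsers overlap requests, so treat it as an illustration of the trend rather than a prediction.

```python
def estimate_load_seconds(round_trips: int, rtt_ms: float,
                          total_bytes: int, bandwidth_kbps: float) -> float:
    """Very rough load-time model: latency cost plus transfer cost.

    Ignores parallel connections, TCP slow start, rendering and caching.
    """
    latency = round_trips * rtt_ms / 1000.0                     # seconds waiting on round trips
    transfer = (total_bytes * 8) / (bandwidth_kbps * 1000.0)    # seconds moving the bytes
    return latency + transfer

# Hypothetical page: 40 requests, 100 ms round trip, 500 KB over a 2 Mbps link.
before = estimate_load_seconds(40, 100, 500_000, 2000)
# Same page after combining files (10 requests) and compressing to 200 KB.
after = estimate_load_seconds(10, 100, 200_000, 2000)
print(f"before: {before:.1f} s, after: {after:.1f} s")
```

In this model the estimate drops from 6.0 to 1.8 seconds, and most of the gain comes from removing round trips rather than bytes.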

As you learn more about this significant change in the way search engines work, you may want to look at Page Speed, an open-source add-on for Firefox/Firebug that evaluates page performance and finds ways to improve results.

This extension to Mozilla Firefox runs inside Firebug, the Web development add-on. Running a performance analysis on a page gives the user a set of suggestions and rules to follow for improvement. Page Speed measures page-load time so that it presents the issue from the user’s viewpoint.

Rich Get Richer?

So, with this additional information about using page speed as a search-engine factor, should we all start to worry about the “big boys” crushing the smaller sites? As we mentioned in brief earlier, this was a cause for concern among Webmasters, developers and site owners. But a blog post from Matt Cutts in April went a long way toward reducing the stress of this announcement.

He doesn’t think this is that big a deal. His reasons include:

There are a lot of sources for learning about site speed, mod_pagespeed and other factors in this process; Google has devoted a section of its Web presence (code.google.com) to the issue. As for the problems presented to small sites, Cutts and others believe that smaller sites will be able to react more quickly and would actually feel fewer negative effects.

Keep in mind that when we took a brief look at Page Speed above (the open-source tool) we mentioned that the emphasis is on load time. That is the amount of time that passes between a user request and the time when they are able to see the full page, with all graphics, images and text.

Some larger sites with complex designs may suffer if they can’t figure out a way to speed up their load time. Part of the answer may be in the move to larger hosting companies that can afford to put lightning-quick servers into operation.

But there’s still another issue with using page speed. The tendency among quality Web companies is to analyze every detail of operation. Google Analytics can slow down load time as it tries to gather significant data. How will this be folded into overall operation of a site?

To Sum Up

Slow page loading leads to loss of viewers; studies have shown this to be true. Even if a site is very popular, users will drift away because of slow response times, and it may take a week or two for them to build up the courage to come back. For now it’s best to take a few basic steps, such as reducing download size where possible, improving layout and minimizing round trips between the browser and the server.

We don’t need to panic about mod_pagespeed but then we probably shouldn’t be shouting from the rooftops either. The best path is somewhere between these two extremes.

A traditional sitemap is a rather simple and efficient way for a Web site visitor to find a specific page or section on a complex Web site. It’s best to start with a home-page link, then offer a list of links to the main sections of the site. Those sections can then link to individual pages. Be absolutely sure the links are accurate and take the visitor to the exact location described.

In addition, it’s generally best to provide one page (sitemap) where search engines can find access to all pages. A search engine sitemap is a tool that the Web designer or administrator uses to tell search-engine spiders which pages exist, how often they change and how important they are relative to one another within the Web site.

So, when we see the term “sitemap” we are fairly sure we know what we are dealing with. The term is very descriptive of the job that this online tool is designed to do. But what about sitemaps for search engines? How do they work? In what specific ways can sitemaps help both developers and users?

Ready-Made Sitemaps for Search Engines

Several companies offer online tools that will “spider” the Web site and create a search-engine sitemap. Some of these services will work with hundreds of pages without charge. If you require the spider to work with more than 500 pages you will have to pay a basic fee. Most of these tools come with instructions on how to apply the sitemap to your site. Once the sitemap is in place you will need to submit it to search engines that will “spider” it.
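
Under the hood, those spidering tools do something like the minimal Python sketch below: a breadth-first crawl that follows links on the same host and stops at a page cap (500 here, echoing the free-tier limit above). The `crawl` function is hypothetical, the three-page site is held in a dictionary instead of being fetched over HTTP, and the regex-based link extraction is a simplification; a real spider would use an HTML parser, honor robots.txt and handle errors.

```python
import re
from collections import deque
from urllib.parse import urljoin, urlparse

HREF_RE = re.compile(r'href="([^"]+)"')

def crawl(start_url, fetch, max_pages=500):
    """Breadth-first crawl that stays on the start URL's host.

    `fetch` is any callable returning the HTML for a URL, or None if missing.
    """
    host = urlparse(start_url).netloc
    seen, queue, found = {start_url}, deque([start_url]), []
    while queue and len(found) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue                                  # broken link: skip it
        found.append(url)
        for href in HREF_RE.findall(html):
            link = urljoin(url, href).split("#")[0]   # absolute URL, no fragment
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return found

# Hypothetical three-page site held in memory.
SITE = {
    "http://www.example.com/": '<a href="/about">About</a> <a href="http://other.example/">x</a>',
    "http://www.example.com/about": '<a href="/contact">Contact</a> <a href="/">Home</a>',
    "http://www.example.com/contact": "",
}
pages = crawl("http://www.example.com/", SITE.get)
print(pages)
```

The resulting URL list is what a sitemap generator would then write out.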

This gets us to the point at which we have established a multiple-sitemap strategy. Our first, traditional sitemap is created so that visitors to your Web site have a good resource for finding their way to your content. The traditional sitemap comes with an added benefit: keyword weight to the linked pages.

Our strategy doesn’t stop there, however. The search engine sitemap lets the most-used search engines know which pages should be part of their databases. Organization and frequency are the keywords here!
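
For a sense of what a search engine sitemap actually contains, here is a minimal Python sketch that writes one in the sitemaps.org XML format, using the optional `changefreq` and `priority` tags for the frequency and organization hints. The `build_sitemap` helper, URLs and values are hypothetical; save the output as a UTF-8 file (for example, sitemap.xml) on your site.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """pages: iterable of (url, changefreq, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=NS)
    for loc, changefreq, priority in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc                  # page address
        ET.SubElement(url, "changefreq").text = changefreq    # how often it changes
        ET.SubElement(url, "priority").text = f"{priority:.1f}"  # 0.0 to 1.0
    return ET.tostring(urlset, encoding="unicode")

xml_text = build_sitemap([
    ("http://www.example.com/", "daily", 1.0),
    ("http://www.example.com/blog/", "weekly", 0.8),
])
print(xml_text)
```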

Video Sitemap

We know the standard sitemap will help visitors find their way to the quality content on your site, and the search engine sitemap is the key to efficient crawling. But what if your site uses video? You may want to consider additional help for those visitors.

When your well-designed site includes links to video players, links to other video content associated with your site and, of course, video on your own pages, you need to make sure that the major search engines (and visitors) can find what they want and need. A search engine bot will only find your video if you include all the necessary information: title and description; URL for the page on which the video will play; URL for a sample or thumbnail; other video locations, such as raw video, source video etc.
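
Here is a minimal Python sketch of a single video sitemap entry using Google’s video sitemap namespace (http://www.google.com/schemas/sitemap-video/1.1). It maps the details listed above onto tags: `loc` for the page where the video plays, plus `video:thumbnail_loc`, `video:title`, `video:description` and `video:content_loc` for the raw video file. The `video_url_entry` helper, URLs and text are hypothetical, and Google’s documentation lists further optional tags.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
VIDEO = "http://www.google.com/schemas/sitemap-video/1.1"
ET.register_namespace("", SM)          # serialize sitemap tags without a prefix
ET.register_namespace("video", VIDEO)  # serialize video tags as video:...

def video_url_entry(page_url, title, description, thumbnail_url, content_url):
    """Build one <url> entry carrying the basic video details."""
    url = ET.Element(f"{{{SM}}}url")
    ET.SubElement(url, f"{{{SM}}}loc").text = page_url                 # page where it plays
    video = ET.SubElement(url, f"{{{VIDEO}}}video")
    ET.SubElement(video, f"{{{VIDEO}}}thumbnail_loc").text = thumbnail_url
    ET.SubElement(video, f"{{{VIDEO}}}title").text = title
    ET.SubElement(video, f"{{{VIDEO}}}description").text = description
    ET.SubElement(video, f"{{{VIDEO}}}content_loc").text = content_url  # raw video file
    return url

urlset = ET.Element(f"{{{SM}}}urlset")
urlset.append(video_url_entry(
    "http://www.example.com/videos/intro.html",
    "Intro to our shop", "A two-minute tour of the store.",
    "http://www.example.com/thumbs/intro.jpg",
    "http://www.example.com/media/intro.mp4",
))
xml_text = ET.tostring(urlset, encoding="unicode")
print(xml_text)
```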

You shouldn’t be too concerned about how much space you’re taking up with video sitemaps because many tools and programs that help you establish video sitemaps will handle thousands of videos. Keep in mind that there will be limits such as a compressed limit expressed in megabytes.

We’ll use Google as an example of formats that will work for video crawling: .mpg, .mpeg, .mp4, .m4v, .mov, .wmv, .asf, .avi, .ra, .ram, .rm, .flv, .swf. All files must be accessible through HTTP.

Mobile Sitemap

No, the sitemap doesn’t move! A mobile sitemap uses a tag and namespace specific to its purpose. The core of a mobile sitemap is the standard protocol, but if you create yours with a dedicated tool, make sure the tool can create mobile sitemaps.

A mobile sitemap should list only URLs that serve mobile Web content. When the search engine crawls it, it will not pay attention to non-mobile content, so in a multiple-sitemap strategy you will need a separate sitemap for non-mobile locations. Make sure you include the <mobile:mobile/> tag!

See a sample of a mobile sitemap for Google at:

http://www.google.com/mobilesitemap.xml or check other search engines to see what you need to do to make this work as it should. Submit mobile sitemaps as you would others.
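
As a rough illustration, this minimal Python sketch writes a mobile sitemap in which every entry carries the `<mobile:mobile/>` tag under Google’s mobile namespace (http://www.google.com/schemas/sitemap-mobile/1.0). The `mobile_sitemap` helper and the m.example.com URLs are hypothetical; compare the output against the sample above before relying on it.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MOBILE_NS = "http://www.google.com/schemas/sitemap-mobile/1.0"

def mobile_sitemap(urls):
    """Return a mobile sitemap; every <url> entry carries the <mobile:mobile/> tag."""
    entries = "\n".join(
        f"  <url><loc>{escape(u)}</loc><mobile:mobile/></url>" for u in urls
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        f'<urlset xmlns="{SITEMAP_NS}" xmlns:mobile="{MOBILE_NS}">\n'
        f"{entries}\n"
        "</urlset>\n"
    )

xml_text = mobile_sitemap([
    "http://m.example.com/",
    "http://m.example.com/news?id=1&page=2",  # "&" must be escaped as &amp;
])
print(xml_text)
```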

Geography and Sitemaps

As with other targeted sitemaps, the subject of targeting certain countries with your sites can consume much more time and space than we have here. But here are some of the basics to get us started.

You can target specific countries when you set up your plan with services such as Google Webmaster Tools. This is accomplished when you submit an XML sitemap. The bottom line is that you can submit multiple sitemaps on one domain.
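
One standard way to offer several sitemaps on one domain (for example, one per language or country version) is a sitemap index file from the sitemaps.org protocol, which simply lists the individual sitemaps. This minimal Python sketch builds one; the `sitemap_index` helper and the file names are hypothetical, and the country targeting itself is still configured in the search engine’s own tools.

```python
from xml.sax.saxutils import escape

def sitemap_index(sitemap_urls):
    """Build a sitemap index that points to several sitemaps on one domain."""
    items = "\n".join(
        f"  <sitemap><loc>{escape(u)}</loc></sitemap>" for u in sitemap_urls
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{items}\n"
        "</sitemapindex>\n"
    )

idx = sitemap_index([
    "http://www.example.com/sitemap-en.xml",
    "http://www.example.com/sitemap-de.xml",
    "http://www.example.com/sitemap-fr.xml",
])
print(idx)
```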

Experience shows that organic traffic to such sites can increase by 25 percent or more. With careful SEO effort on each of the language versions, the results could be even more impressive.

Submit and Grow

To wrap up this very brief look at the subject of sitemaps, let’s consider a few of the top places for submitting your sitemaps. Keep in mind that search-engine technology has changed and improved so you will need to be familiar with the standards and requirements to be successful.

Don’t limit yourself to the idea of submitting one URL and having the crawler do the work. This isn’t the most efficient way to get where you want to go. Devote some time to learning about submitting to Google, Bing, Ask.com, Moreover, Yahoo Site Explorer and Live Webmaster Center among others. These seem to be the top destinations in the opinion of most industry observers.

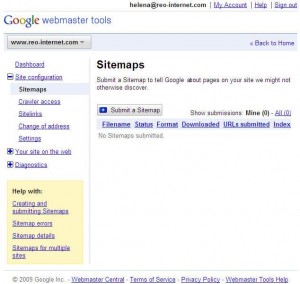

The process of submitting a sitemap differs slightly from one place to another, but in general terms you use the “configuration” link and choose “sitemaps.” You’ll need to enter the path to your sitemap in the space provided. With Google, for example, if your sitemap lives at http://www.example.com/sitemap.xml, you type sitemap.xml and choose “Submit.”

You should get confirmation and even a screen shot to show what you have accomplished.

Good luck!

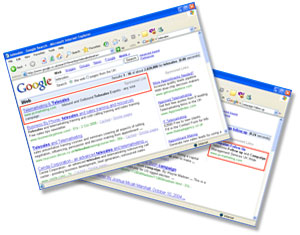

Recently there was some discussion about Google’s new search engine results page, which offers the ability to see a preview of the websites within the results. Here is one of the original posts; it appears that Google is now testing, or has released, this in the United States results.

In reality this is not a new idea; they are basically borrowing it from Search Me.

I do like the idea of letting users view the quality of a website before clicking. I wonder if they plan on adding this to PPC? That would change the whole game.

It’s all about the details. The application provides more detailed information for each search query. Previously, a site’s pages were reported using average position. Updates introduced in the past year allow users to find the number of “impressions” and the “amount of clickthrough.” Impressions are the instances in which the site appeared in search results. Clickthrough measures the “number of times searchers clicked on that query’s search results.”
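
Put together, these two numbers give the clickthrough rate: clicks divided by impressions. The minimal Python sketch below computes it for a few queries; the `clickthrough_rate` helper and all of the query stats are hypothetical.

```python
def clickthrough_rate(clicks: int, impressions: int) -> float:
    """Share of impressions that turned into clicks (0.0 when there were none)."""
    return clicks / impressions if impressions else 0.0

# Hypothetical query stats: query -> (impressions, clicks)
stats = {
    "seo tips": (1200, 60),
    "white hat seo": (300, 27),
    "google news inclusion": (0, 0),
}
for query, (impressions, clicks) in stats.items():
    print(f"{query}: {clickthrough_rate(clicks, impressions):.1%}")
```

A query with many impressions but a low rate is often a sign that the title or snippet shown in the results needs work.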

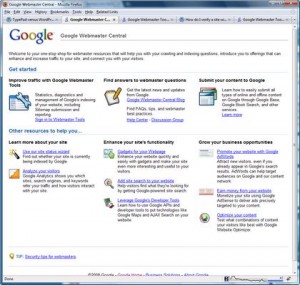

Webmaster Tools was designed to provide “information and data about the sites you have added to your account. You can use this data to improve how search engines crawl and index your site’s content.” That’s the basic task for this tools application, as described by Google. But there is more to this than what we have become used to.

The Webmaster Tools now generates charts and displays them in the report page. Query information can be isolated for a specific period of time as well. Now, when a site ranks for more than 100 queries there will be new buttons that allow Web managers to find out more details. The improvements should save time and money if used correctly.

For those who have been using Google Webmaster Tools the addition of new backlink statistics will be a nice change. Everyone who wants to succeed in the online world should know about links and linking, whether they use them fully or not.

But not all links contribute to Web success. Proper linking does. If you can get a handle on the idea of backlinks you are even more likely to have a positive experience with your sites. You see, backlinks are essential in the search-engine visibility and ranking world. The key word in the new online community is conversation.

Getting reliable, usable links from pages that are relevant to what you do is very important. Webmaster Tools now has a feature that helps you keep track of who is linking to whom, with details such as the exact page on the referring site. This information is now offered in a format that is easier to use. Check into Webmaster Central Tools (sitemap) if you are a registered Web site owner.

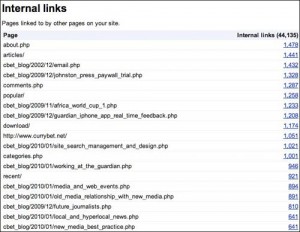

Internal Links – Count per page tells you which pages are linked to from other pages on your Web site and shows the number of internal links that point to each of those pages. Some of the newest linked pages may not appear right away, perhaps because your link isn’t set up properly.

Internal links – Details for valid links from inside your domain or Web site.

External Links – Count per page tells you which pages from your Web site have a link from another domain and the number of links pointing to each of those pages.

External Links – Details for links on pages at another domain that point to your site. URL is displayed along with the date that Google last crawled the link.
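
If you have a plain list of links (say, from a downloaded report or your own crawl), the “count per page” figures are easy to reproduce. This minimal Python sketch splits links into internal and external counts per target page; the `link_counts` helper and all URLs are hypothetical, and it is not how Google builds its reports.

```python
from collections import Counter
from urllib.parse import urlparse

def link_counts(links, my_host):
    """Count internal and external links per target page on my_host.

    links: iterable of (source_url, target_url) pairs.
    """
    internal, external = Counter(), Counter()
    for source, target in links:
        if urlparse(target).netloc != my_host:
            continue                        # link points elsewhere: not ours to count
        if urlparse(source).netloc == my_host:
            internal[target] += 1           # linked from our own pages
        else:
            external[target] += 1           # linked from another domain
    return internal, external

links = [
    ("http://www.example.com/", "http://www.example.com/services"),
    ("http://www.example.com/blog", "http://www.example.com/services"),
    ("http://news.example.org/story", "http://www.example.com/blog"),
    ("http://www.example.com/blog", "http://other.example.net/"),
]
internal, external = link_counts(links, "www.example.com")
print(dict(internal), dict(external))
```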

These reports can be downloaded and used in many search-engine optimization tools.

Analysis is very important in the successful management of Web sites. One of the key tools is page analysis, which includes details on the important keywords Google found on your site, listed in order of priority. The most common words in external links to your site are also reported.
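
Google doesn’t publish how it ranks the keywords in this report, but a simple word count gives a feel for the idea. The Python sketch below counts words in a hypothetical page while skipping a small, illustrative stop-word list; `top_keywords` is a hypothetical helper, not Google’s method.

```python
import re
from collections import Counter

# A tiny, illustrative stop-word list; real tools use much longer ones.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "for", "in", "on", "is", "your"}

def top_keywords(text: str, n: int = 3):
    """Return the n most common non-stop-words in text, most frequent first."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return counts.most_common(n)

page_text = """SEO tips for small business sites. Our SEO advice is white hat.
Small business owners can use these SEO tips on your own site."""
print(top_keywords(page_text))
```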

Google Webmaster Tools has also provided query stats for some time. For example, you can access top search queries, which are the queries on the selected search property that most often returned pages from your Web site. You can also see the number of clicks each top search query sent to your pages, as well as the average top position. Google describes this as the “highest position any page from your site ranked for that query.” Keep in mind that data in these reports is given as a seven-day average.

Google also added search capability that allows Web administrators to “search through your site’s top search queries so that you can filter the data to exactly what you’re looking for in your query haystack.”
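
The filter-then-average idea can be sketched in a few lines of Python. Under the assumption that you have each query’s top position for recent days, the hypothetical `filter_queries` helper below keeps only queries containing a search term and averages the last seven days, roughly mirroring the search box and the seven-day figures; the data is invented and this is not Google’s calculation.

```python
from statistics import mean

def filter_queries(daily_positions, term):
    """Keep queries containing `term`; average their last seven daily top positions.

    daily_positions: dict mapping query -> list of daily top positions
    (1 = first result), oldest first.
    """
    term = term.lower()
    return {
        query: round(mean(positions[-7:]), 1)     # seven-day average
        for query, positions in daily_positions.items()
        if term in query.lower()                  # case-insensitive filter
    }

# Hypothetical data: query -> top position on each of the last eight days.
history = {
    "seo tips": [9, 8, 8, 7, 7, 6, 6, 5],
    "local seo": [15, 14, 12, 12, 11, 11, 10, 10],
    "google instant": [3, 3, 4, 3, 3, 2, 3, 3],
}
result = filter_queries(history, "SEO")
print(result)
```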

Some Web experts and Google-watchers find the data/information features to be a bit overwhelming. It seems the Web site administrator or Webmaster will have to use some trial-and-error methods to find out which numbers and reports are most useful.

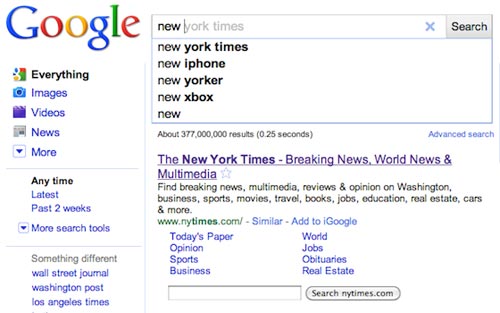

Regular observers of Google and the world of Internet searching call Google Instant Search an aggressive attempt to define the way people use the Internet. The year 2010 may indeed be remembered by some as the year in which this Web-use behemoth redefined searches.

Is this a truly fundamental change? Earlier this year an article in one of the leading technology magazines heralded the end of the Internet as we know it. That article stated the belief that future Internet use will be about applications rather than about searching and finding. This basic change would take users from spending time on the search process to arriving at, and beginning to use, the outcome much more quickly.

But does Google Instant Search refine the use of the World Wide Web so completely? Do we, as users, actually arrive at applications instantly? Let’s see what veteran Google-watcher Tom Krazit has to say about this. Keep in mind that Krazit has followed Google on a consistent basis because he considers it “the most prominent company on the Internet.”

Some of the key points Krazit makes about this new search technology are:

This last factor may be the most important one of all. In the minds of millions of Internet users the word Google is a verb. We don’t “search” the World Wide Web for information. We “google” it. This is a key point made by Krazit and others. Krazit sees Google Instant Search as “a combination of front-end user interface design and the back-end work needed to process results for the suggested queries on the fly.” This is quite different from what other major Internet players have done or will do.

It’s Still Great, Right?

The benefits of Google Instant Search may seem obvious, but there are some search aficionados who have questions and concerns about the idea. The technology makes predictions and provides suggestions as to what a user might be searching for. According to Google, these features cut typing and search time by 2 to 5 seconds per search.

That might seem like great stuff, but not everyone is completely sold. Some people still want to turn this feature off, and this can be done through the “Search Settings” link. The company states that this doesn’t slow down the Internet process and adds that the experienced user will welcome the efficiency of Google Instant Search, especially because it doesn’t affect the ranking of search results.

As quick and efficient as Internet searches already were (even a few years ago) Google Instant Search came along as an effort to make the process move more quickly. In its simplest terms, this technology is designed to take viewers to the desired content before they finish typing the search term or keyword. Individuals certainly don’t have to go all the way to clicking on the “Search” button or pressing the “Enter” key.
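
Google hasn’t published how Instant Search works internally, but the basic “suggest as you type” idea can be shown with a toy prefix matcher. In this minimal Python sketch, the query log, the blocked-word list and the `suggest` function are all hypothetical; the blocked list stands in for the kind of offensive-word filter Google describes.

```python
import bisect

# Hypothetical query log, kept sorted so prefix lookups can use binary search.
QUERIES = sorted([
    "seo moves", "seo tips", "seo tools", "seo badword",
    "search engine optimization", "sitemap generator", "site speed",
])
BLOCKED = {"badword"}  # stand-in for a filter list of offensive terms

def suggest(prefix, limit=3):
    """Return up to `limit` logged queries that start with `prefix`."""
    prefix = prefix.lower()
    start = bisect.bisect_left(QUERIES, prefix)   # first entry >= prefix
    results = []
    for query in QUERIES[start:]:
        if not query.startswith(prefix) or len(results) == limit:
            break                                 # past the matching block, or full
        if not BLOCKED.intersection(query.split()):
            results.append(query)                 # skip suggestions with blocked words
    return results

for typed in ("s", "seo t", "sit"):
    print(repr(typed), "->", suggest(typed))
```

Each keystroke narrows the prefix, so the suggestion list updates before the user finishes typing, which is the behavior described above.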

Logical Extension

Rob Pegoraro, writing for The Washington Post, states that Google Instant Search is the “next logical extension” of what Google calls the “auto-complete” feature. The journalist does comment, correctly, on our “collective attention span online” and notes that the need for and use of instant-searching tools seems a bit frightening.

One thing parents and Web do-gooders won’t have to worry about is the appearance of offensive words and links to obscene destinations. Google has provided filtering capability that will prevent the display of the most common “bad” words, for example.

Pegoraro also expressed the hope that saving 2 to 5 seconds per search doesn’t become “Instant’s primary selling point.” Instant Search continues one feature of earlier search tools: it tailors results to the location of a signed-in user. That tailoring already sped up searches before Instant Search, so it should do the same and more now.

Making Connections

In 2009 a fellow named Jeff Jarvis wrote a book about Google that put the Internet giant in a special place among the Web users of the 21st century. His book carried the title “What Would Google Do?” If this title gives the impression that one Internet company is guiding the online world, it’s because Google does just that.

A key issue in Jarvis’ book is the connection made between users or between users and providers. Google Instant Search brings the provider/user connection to the conversation level. In a way, this technology mimics how we actually converse with friends and family members. As we construct a sentence and speak it, the listener is already forming thoughts about our subject or point.

Google Instant Search does this and we respond quickly with adjustments and new information. The search tool forms its “opinions” and makes suggestions again. It’s sort of like that annoying guy who finishes our sentences for us and this may be a problem for many long-time Internet fanatics.

With all of this in mind, we should understand that Google’s management team and developers spent a lot of time testing this technology before introducing it. Yet, it isn’t perfect by any means. Slower Internet connections may struggle with Instant Search. As mentioned earlier, the change has caused some concern among those who make their living with search-engine optimization.

One more question: Is Google Instant Search perfect for mobile use? We’ll find out over the next few months.

Google launched Ad Innovations at the end of March as a lab for ad products it is considering. It’s designed to let AdWords account holders experiment with new ad technologies and give Google feedback on them. The products may or may not be released for real in the future, depending on how they test out among AdWords users.

Search Funnels was launched a couple of weeks ago. It slices and dices your search, conversion, keyword, and number of steps preceding conversions so you can figure out which ads are getting the most conversions and why. It’s actually pretty complicated, but there’s a video at the official AdWords Blog that gives you a good overview.

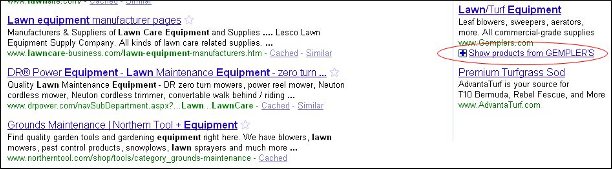

Google has long been known for text-based CPC ads, but it has been busy coming up with new ad models based on these text ads: product listing ads, comparison ads, and ad extensions, which consist of sitelinks, product extensions, video extensions, location extensions, multiple addresses for location extensions, and click to call phone extensions. Let’s go through each of these.

Product Listing Ads are in limited beta release right now. They include more product info, like images, prices, and merchant names without requiring additional keywords or text. So when someone enters a search query relevant to something in your Google Merchant Center account, Google may or may not show the most relevant products with the associated price, product name, and image. These are only charged on a cost per action (CPA) basis, so you only have to pay when someone actually buys something from your site.

Comparison Ads are also in limited beta release. These let users compare several relevant offers and work on a cost per lead format. With these, the users do not have to fill in forms, and Comparison Ads doesn’t send advertisers any kind of personally identifiable user stats. Right now this is being used for the credit card and mortgage loan industries in select locations. Comparison ads should let advertisers target offers more accurately in order to get more leads.

The suite of Ad Extensions lets advertisers make ads more relevant and more useful. They expand on the concept of standard text ads, giving viewers the option of getting additional information right in the ad without leaving the search page. These extensions work with existing text ads without requiring changes to bids, ad text, or keywords. Following are the types of Ad Extensions offered.

Ad Sitelinks lets advertisers extend the possibilities for existing AdWords ads, providing links to specific content deeper within your site’s sitemap. So rather than sending all users to the same landing page, Ad Sitelinks will offer up to four more destination URLs for potential customers to choose from. You can use ad sitelinks to direct visitors to specific parts of your site, such as promotions, store locators, and gift registries. Early users have reported improvements in clickthrough rates of up to 30%.

Product Extensions are in limited release and allow you to add more to your existing AdWords ads with specific product information on the merchandise you sell. They use your existing Google Merchant Center account to highlight products relevant to the user’s query, and they can also show pictures, titles, and prices of products. In the first screen shot, you’ll see the “plus box” under the ad indicating the product extension. When a user clicks on that plus box, lots of products, along with thumbnail pictures, show up, as you can see in the second screen shot. You’re charged the same CPC whether the user clicks on your main text ad or any offers in the product extensions box. But if a user simply expands the box without clicking through to the site, you’re not charged.

Video Extensions are in limited beta release and allow you to engage prospective customers with video content. The video extensions are in an expandable plus box under the standard text ad. If the user watches the video for 10 seconds or more, you’re charged based on the maximum cost per click bid of your text ad. After viewing the video, the user can click the URL link in the ad or go directly to your site with no extra charge to you. These are great for movie trailers and product usage instructions.

Location Extensions attach your business address to your ads. Your ad can also contain your business’s name, phone number, and address, promoting your business and its products and services with a specific location that’s of interest to the user. It’s good for drawing in local customers.

Multiple Addresses for Location Extensions, also in limited beta release, displays a plus box under ads at the top of the page. Whenever a user clicks on that plus box, he or she sees a map that shows nearby store locations, plus a search box for moving the map around. This feature shows as many stores as are relevant to a given search, increasing the chance of picking up local customers.

Click to Call Phone Extensions are for people searching for products and/or services from a smartphone (like the iPhone, Android phones, or the Palm Pre). This feature has been fully released. If someone finds your business in a search and would rather call you than visit your website, they can use the click to call phone extension in your ad on any mobile device with full internet browsing capability. You’re charged the same for a customer’s call as you would be had they clicked through to your website.
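
To make the billing rules for product extensions, video extensions and click to call concrete, here is a minimal Python sketch under a simplified reading of the descriptions above. The `total_charge` function and the event names are hypothetical, and the flat per-click price is a simplifying assumption; real AdWords prices come out of the ad auction.

```python
def total_charge(events, cpc):
    """Charge for one ad's interactions under a simplified reading of the rules.

    events: list of (kind, seconds_watched) tuples, where kind is one of
    "expand", "click", "offer_click", "video_view" or "call".
    cpc: assumed flat price per billable interaction.
    """
    total = 0.0
    video_paid = False
    for kind, seconds in events:
        if kind == "expand":
            continue                  # just opening the plus box is free
        if kind == "video_view":
            if seconds >= 10 and not video_paid:
                total += cpc          # a view of 10+ seconds is billed like a click
                video_paid = True
        elif kind == "click" and video_paid:
            continue                  # click-through after a paid view costs nothing extra
        else:
            total += cpc              # ad click, product-offer click or phone call
    return total

print(total_charge([("expand", 0)], 0.75))                      # product box opened only
print(total_charge([("expand", 0), ("offer_click", 0)], 0.75))  # product offer clicked
print(total_charge([("video_view", 12), ("click", 0)], 0.75))   # video watched, then visit
print(total_charge([("call", 0)], 0.75))                        # click to call from a phone
```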

In order to understand the rise of paid content, it’s necessary to understand the meaning of the nofollow tag and how it is used (and some would say abused) by large sites like Twitter.

The nofollow tag is used to tell some search engines (*cough*Google*cough*) that a hyperlink should not influence the link target’s search engine ranking. It was originally intended to reduce the effectiveness of search engine spam. Spam comments were the nofollow tag’s original targets: spam comments on blogs were used to get back links and try to squeeze a few drops of link juice from as many places as possible. By making comment links nofollow, the webmaster is in effect saying, “I am in no way vouching for the quality of the place this link goes. Don’t give them any of my link juice. Maybe it’s a good site, but I’m not taking chances.”

Nofollow links are not meant to prevent pages from being indexed or to block access. For that, use the robots.txt file to block crawler access, and on-page robots meta elements to specify, page by page, what a search engine crawler should (or should not) do with the content on the crawled page.
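For the robots.txt side, Python’s standard-library `urllib.robotparser` module shows how a crawler interprets the file. The rules and URLs below are made-up examples. The per-page alternative is a robots meta element such as `<meta name="robots" content="noindex">` in the page’s head, which this sketch does not cover.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt: every crawler is asked to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch(user_agent, url) answers: may this crawler request this URL?
print(parser.can_fetch("Googlebot", "http://www.example.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "http://www.example.com/blog/post.html"))  # True
```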

Nofollow was born in 2005, and since then, in the SEO arms race between the search engines and those who want to game them, websites have selectively used the nofollow tag to “sculpt” PageRank among the pages of their own site. In other words, a link to an internal page that was ticking over nicely could be made nofollow in an attempt to “conserve” PageRank juice for another internal page that was just starting out, or struggling, and needed some help.
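To see why sculpting looked attractive, here is a toy version of the textbook PageRank calculation (power iteration with the commonly cited 0.85 damping factor) on a hypothetical three-page site. It assumes, as sculptors did, that a nofollowed internal link simply drops out of the calculation. It is a sketch of the idea only, not a description of how Google actually treats nofollow links.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Textbook PageRank by power iteration.

    links maps each page to the pages it links to (followed links only).
    Assumes every page has at least one outgoing link (no dangling pages).
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for page, targets in links.items():
            share = rank[page] / len(targets)  # rank is split evenly across outlinks
            for target in targets:
                new_rank[target] += damping * share
        rank = new_rank
    return rank


# Hypothetical site: the home page links to an established page and a new one.
normal = {"home": ["about", "new_page"], "about": ["home"], "new_page": ["home"]}

# Sculpting assumption: home -> about is nofollowed and drops out entirely,
# so new_page receives all of the home page's share.
sculpted = {"home": ["new_page"], "about": ["home"], "new_page": ["home"]}

before = pagerank(normal)
after = pagerank(sculpted)
print(f"new_page: {before['new_page']:.3f} -> {after['new_page']:.3f}")
```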

Well, Google frowns on this, insisting that in the long run you’re better off linking normally to your site’s pages than selectively nofollowing links to juice up the pages you think need a boost. According to Matt Cutts, the only time you should use nofollow is when you cannot or don’t want to vouch for the content of a site. An example would be a link added by an outside user you don’t trust (say, in a comment thread). Cutts suggested that links left by unknown users on your guestbook page should automatically be nofollowed.

Right, so what does this have to do with paid content?

Paid content companies take advantage of Google’s emphasis on domain authority by buying up trusted sites like eHow (purchased by the seemingly insatiable Demand Media) and dumping lots and lots of esoteric content into them. Why? They get the domain authority, and the esoteric content helps ensure that when someone, somewhere searches for an article on, say, how to make a butterfly-shaped cake, the content they paid a writer a couple of bucks for shows up at the top of the search engine results. In other words, they’re targeting the proverbial “long tail.”

How do these sites know what content to buy? They use algorithms that comb through keywords and keyword combinations to find gaps in the available information, then commission writers to produce content specifically to fill those gaps. You may have heard the statistic that 20 to 25% of the queries Google sees have never been searched before. That’s a huge, huge number of queries, and the more of them you can anticipate and answer, the more hits your site will get over the long term.
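Nobody outside these companies knows exactly how their algorithms work, but the basic idea of finding “gaps” can be sketched in a few lines of Python: combine modifiers with topics, skip the phrases you already have articles for, and rank what’s left by estimated demand. The function name, every phrase, and every volume figure below are made up for illustration.

```python
from itertools import product


def find_content_gaps(modifiers, topics, existing_titles, est_volume):
    """Hypothetical gap finder: uncovered 'modifier topic' phrases, highest demand first."""
    gaps = []
    for modifier, topic in product(modifiers, topics):
        phrase = f"{modifier} {topic}"
        if phrase not in existing_titles:
            gaps.append((est_volume.get(phrase, 0), phrase))
    # Highest estimated volume first; ties broken alphabetically.
    gaps.sort(key=lambda gap: (-gap[0], gap[1]))
    return [phrase for _, phrase in gaps]


modifiers = ["how to bake", "how to decorate"]
topics = ["a butterfly shaped cake", "a castle cake"]
existing = {"how to bake a castle cake"}
volume = {"how to bake a butterfly shaped cake": 90, "how to decorate a castle cake": 40}

print(find_content_gaps(modifiers, topics, existing, volume))
# prints: ['how to bake a butterfly shaped cake', 'how to decorate a castle cake', 'how to decorate a butterfly shaped cake']
```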

While link spam and comment spam were clearly short-term efforts by sites to claw their way to the top of the search engine rankings, and were relatively easy to squash with nofollow tags, paid content is more of a long-term strategy, and it’s not clear what, if anything, Google can do about it.

What seems to be happening is that sites like Twitter are kneeling down before their Google overlords (as one side of the story goes) and automatically making even the most harmless links (such as your own link to your own website in the “Bio” part of your Twitter profile) nofollow links. That has seriously ticked off a lot of long-time Twitter users who legitimately poured in lots of very real, original content and can no longer get any link love from that Bio link, even though it’s from them, to their very own site. When this happened, metaphors about Google and Twitter ran rampant: “throwing the baby out with the bathwater,” “shutting the barn door after the horse gets out,” “cutting off its nose to spite its face,” etc.

The strategy seems to be that if nofollow links are used as they were intended (well, as Google intended, anyway), sites that are all promotion and no content will have a harder time getting to the top of the search engine results pages. Google’s fear is that paid content will game the system for odd or unusual searches, and the person who really does devote his life to making the world’s best butterfly-shaped cakes will lose out to paid content sites that had writers or videographers hack together a 5-step instructional page or video.

Whether it will work remains to be seen. For now, paid content sites are doing pretty well for themselves. So are the search engines that cater to them, like Ask.com, which wraps a few “real” sites in with sponsored results. From February 2010 to March 2010, Ask.com’s share of search engine traffic rose from 2.84% to 3.44%, while the traffic for the other (admittedly much larger) search engines stayed relatively flat. Have a look at the screen shot of Ask.com’s results for “How do I bake a butterfly shaped cake” to see for yourself the influence of paid content on this search engine.
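For a sense of scale, the two Ask.com shares quoted above work out as follows (simple arithmetic on those figures only):

$$
\Delta = 3.44\% - 2.84\% = 0.60 \text{ percentage points}, \qquad \frac{0.60}{2.84} \approx 0.211
$$

In other words, Ask.com’s share grew by roughly 21% relative to its February level in a single month.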

Most of us have noticed the new and improved Google search results pages by now. The biggest change (in my opinion) is the new left-hand column you get when you click the “Show Options” link at the top of the results page. Before, if you wanted to limit a search to a certain time frame or make other refinements, you had to go to the Advanced Search page; now you can make a lot of those refinements right from your original results page.

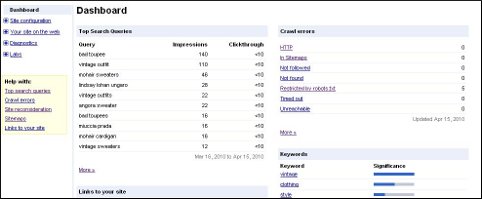

Have you opened up Google Webmaster Tools recently? If not, you should, because you’ll find a bunch of new features. Your Webmaster Tools dashboard shows top search queries, links to your site, crawl errors, sitemaps, and more, as you can see in the screen shot. From the dashboard, you can get more information on any of these. The next screen shot shows all the Webmaster Tools options that appear in the left-hand column of your dashboard.

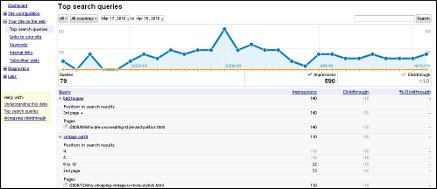

If, for example, you’re looking at your top search queries, which are under the “Your Site on the Web” menu, you can scroll to the bottom of the list and click “more.” You’ll be taken to a page with a graph of your queries by date and a breakdown of individual search terms and where you rank for them, as seen in the next screen shot. Also under “Your Site on the Web” you can find links to your site, keywords, internal links, and subscriber statistics.

You used to be able to see only your site’s top 100 search queries, but now you can see many more: if your site ranks for more than 100, you’ll see page buttons at the bottom of the “Top Search Queries” list. The graph of your top search queries is also new; you used to have to download the data and chart it yourself, so this feature is especially handy. You also get a date range selector like the one in Google Analytics, so you can narrow your data down to a particular time period.
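If you still download query data to chart it yourself, the date-range filtering is easy to reproduce. This Python sketch assumes a hypothetical CSV export with `date`, `query`, and `clicks` columns (the real export’s columns may differ), and the sample rows are made up. It totals clicks per query within a date range.

```python
import csv
import io
from collections import defaultdict
from datetime import date


def top_queries(csv_text, start, end, limit=10):
    """Total clicks per query between start and end (inclusive), highest first.

    Assumes hypothetical columns: date (YYYY-MM-DD), query, clicks.
    """
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        day = date.fromisoformat(row["date"])
        if start <= day <= end:
            totals[row["query"]] += int(row["clicks"])
    # Sort by clicks descending, then alphabetically to break ties.
    ranked = sorted(totals.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:limit]


SAMPLE = """date,query,clicks
2010-03-01,seo tips,12
2010-03-02,seo tips,8
2010-03-02,google news inclusion,15
2010-04-01,seo tips,30
"""

print(top_queries(SAMPLE, date(2010, 3, 1), date(2010, 3, 31)))
# prints: [('seo tips', 20), ('google news inclusion', 15)]
```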

Under the “Site Configuration” menu, you’ll find Sitemaps, Crawler access, and Sitelinks, if your site has any. Sitelinks are the internal links that Google sometimes puts in search results, as you can see in the screenshot. There is also a “Settings” section, where you can set a geographic target, view your crawl rate, and configure parameter handling, which some webmasters use to get more efficient crawling with fewer duplicate URLs. You don’t have to set any of these, because there are defaults, but some webmasters may want to. Some people have raised concerns that having country-specific sites will cause duplicate content issues, but this isn’t the case, so setting geographic targets will not harm your rankings.
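Parameter handling boils down to telling the crawler which URL parameters don’t change a page’s content, so that URL variants collapse into one. Here is a minimal Python sketch of that idea using `urllib.parse`; the ignored parameter names and example URLs are hypothetical, and this is not how Google implements the feature.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that don't change page content on this example site.
IGNORED_PARAMS = {"sessionid", "sort"}


def canonical_url(url, ignored=IGNORED_PARAMS):
    """Drop ignored query parameters (and the fragment) so URL variants collapse to one."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in ignored
    ]
    kept.sort()  # parameter order shouldn't create a "new" URL either
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


variants = [
    "http://www.example.com/shoes?color=red&sessionid=abc123",
    "http://www.example.com/shoes?sort=price&color=red",
    "http://www.example.com/shoes?sessionid=xyz&color=red&sort=name",
]
print({canonical_url(u) for u in variants})
# prints: {'http://www.example.com/shoes?color=red'}
```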

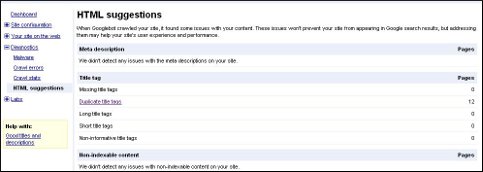

Diagnostics is another handy set of features. From the Diagnostics menu, you can check for malware on your site and view crawl errors, crawl stats (which show Googlebot activity over the preceding 90 days), and HTML suggestions. The Malware page tells you whether Google has found malware on your site, and the HTML suggestions page gives a breakdown of title tag information, including duplicate, missing, short, long, and non-informative title tags. It also alerts you if there is non-indexable content on your pages. Just FYI, the Malware diagnostic used to be under the “Labs” menu, but late in 2009 it “graduated” to the Diagnostics menu.
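You can run a rough version of the title tag checks on your own pages before Google does. This Python sketch flags missing, duplicate, short, long, and non-informative titles; the length thresholds, the list of non-informative titles, and the sample pages are assumptions for illustration, not Google’s actual rules.

```python
from collections import defaultdict

MIN_LENGTH = 10  # assumed threshold for a "short" title
MAX_LENGTH = 70  # assumed threshold for a "long" title
NON_INFORMATIVE = {"home", "index", "untitled", "untitled document"}  # assumed examples


def title_report(titles):
    """titles maps URL -> title text (None or "" if missing); returns issue -> list of URLs."""
    report = defaultdict(list)
    by_title = defaultdict(list)
    for url, title in titles.items():
        text = (title or "").strip()
        if not text:
            report["missing"].append(url)
            continue
        by_title[text.lower()].append(url)
        if text.lower() in NON_INFORMATIVE:
            report["non_informative"].append(url)
        if len(text) < MIN_LENGTH:
            report["short"].append(url)
        elif len(text) > MAX_LENGTH:
            report["long"].append(url)
    # Any title shared by two or more URLs counts as a duplicate.
    for urls in by_title.values():
        if len(urls) > 1:
            report["duplicate"].extend(urls)
    return dict(report)


pages = {
    "/": "Home",
    "/about": "About SEO Moves",
    "/contact": "About SEO Moves",
    "/draft": "",
}
print(title_report(pages))
```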

The Labs menu lets you play with new tools that may or may not stick around. For example, you can see what your site (either the homepage, which is the default, or a specific page whose URL you type in) looks like to Googlebot by choosing “Fetch as Googlebot” under the Labs menu. Once you click “Fetch,” the URL will be listed, and under Status you should see “Success” and a green check mark. Click “Success” and you’ll see the source of your page as Googlebot sees it.
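Outside Webmaster Tools, you can roughly approximate a fetch by requesting a page with one of Googlebot’s documented user-agent strings, as in this Python sketch. It only shows what your server sends to that user agent; the request doesn’t come from Google’s servers, so it won’t reflect anything that depends on the requester’s IP address. The helper names are hypothetical.

```python
from urllib.request import Request, urlopen

# One of Googlebot's documented user-agent strings.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_request(url):
    """Build a request that identifies itself as Googlebot (hypothetical helper)."""
    return Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_googlebot(url, timeout=10):
    """Return (HTTP status, page source) as served to the Googlebot user agent.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    with urlopen(googlebot_request(url), timeout=timeout) as response:
        return response.status, response.read().decode("utf-8", errors="replace")


# Usage (makes a real network request):
# status, source = fetch_as_googlebot("http://www.example.com/")
```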

You can make a Sidewiki for your site under the Labs menu, too. It may not be something you’re interested in, but Sidewiki is a Google Toolbar feature for IE and Firefox that adds a universal commenting system to any and every page on the internet. To use it, you have to install the Google Toolbar for IE or Firefox; Google says they’re working on a version for Chrome. When you install the toolbar with Sidewiki in it, you’re taken to a landing page that tells you how to use it. Sidewiki entries are ranked by an algorithm so that the most useful, highest-quality entries are displayed first. Toolbar haters will probably want to skip this one.

The Site Performance option under the Labs menu basically walks you through installing Page Speed, a Firefox add-on that helps you figure out how fast your site loads and what you can do to improve load speed. Page Speed runs a set of diagnostics against a page and analyzes performance factors such as resource caching, upload and download sizes, and client-server round trips. You’ll get a red / yellow / green grade for each factor, plus specific suggestions for making your page faster. It also does some automatic optimization, such as compressing images and “minifying” JavaScript code.
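One thing Page Speed checks is whether text resources such as HTML, CSS, and JavaScript are served compressed, which cuts download size. This Python sketch uses the standard `gzip` module to estimate how much a resource would shrink; the function name and sample payload are made up, and real savings depend on the content.

```python
import gzip


def gzip_savings(payload: bytes) -> float:
    """Fraction of bytes saved by gzip-compressing payload.

    Can be negative for tiny inputs, because gzip adds a header and trailer.
    """
    if not payload:
        return 0.0
    compressed = gzip.compress(payload)
    return 1 - len(compressed) / len(payload)


# Made-up, highly repetitive HTML; real pages usually compress less dramatically.
page = b"<li class='post'><a href='/blog/'>SEO tips</a></li>\n" * 200
print(f"original: {len(page)} bytes, gzip saves {gzip_savings(page):.0%}")
```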

This is very valuable information, complete with hints on things like speeding up your site. Honestly, you could spend half a day going through the data you now get from Webmaster Tools and probably come up with a dozen tweaks that fine-tune your site’s performance. Initial reaction to the new tools, particularly the top search queries, has been very positive.