John has 13+ years of experience in web development, ecommerce, and internet marketing. He has been actively involved in the internet marketing efforts of more than 100 websites in some of the most competitive industries online. John comes up with truly off-the-wall ideas and has pioneered some completely unique marketing methods and campaigns. John is active in every aspect of the work we do: link sourcing, website analytics, conversion optimization, PPC management, CMS, CRM, database management, hosting solutions, site optimization, social media, local search, and content marketing. He is our conductor and idea man, and has a reputation as a brutally honest straight shooter. He has been in the trenches himself and understands what motivates a site owner. His driven personality works to the client's benefit, as his passion fuels his desire for your success. His aggressive approach is motivating, his intuition for internet marketing is finely tuned, and his knack for link building is unparalleled. He has been published in books and numerous international trade magazines, has been featured in the Wall Street Journal, has sat on the boards of trade associations, and has been a spokesperson for Fortune 100 corporations including MSN, Microsoft, eBay and Amazon at several internet marketing industry events. John is addicted to Peet's coffee, loves travel and golf, and is a workaholic except on Sundays during Steelers games.

This post assumes that you know what Twitter is (a place where you can publish 140-character microblog posts) and how to get an account (just go to Twitter.com and it will walk you through it). Twitter is becoming more important in the world of SEO because Google now shows “real time” search results from Twitter, as you can see in the screen shot. When Twitter results appear on a Google results page, they update in real time. However, there is a “pause” link at the top of the Twitter results so you can freeze them long enough to get a better look.

Something that can be very helpful when you are designing and refining your website is knowing what it “looks like” to the bots that crawl the web and index your pages. If your site doesn’t have the information that the bots need to know what your content and graphics are all about, then they can’t do a very good job indexing your pages.

If you use Firefox, you can download and install the “User Agent Switcher” add-on. You’ll have to restart Firefox after installing it. Then, in Firefox, go to Tools, then User Agent Switcher, then Options, then Options again. In the User Agent Switcher window that comes up, select User Agents and click “Add.”

In the Description box, type something like “Google Bot” and in the User Agent box, paste this:

Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

In the App Name box type Googlebot, then click OK. Now, any time you want to view one of your pages as if you were the Google bot, you go to Tools, User Agent Switcher, Googlebot.

You might have to block cookies to view some sites, and you can do this in Tools, Options, Privacy, Exceptions (then add the URL).
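If you would rather check from a script than from Firefox, the same trick works with any HTTP client. The sketch below, using only Python's standard library, sends the Googlebot user-agent string quoted above; `googlebot_request` and `fetch_as_googlebot` are hypothetical helper names, not part of any Google tool. It only changes what your server is told about the visitor, not how the page is rendered, and servers that verify Googlebot (for example with a reverse DNS lookup) may treat this request like any other visitor.

```python
import urllib.request

# The Googlebot user-agent string quoted in the steps above.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_request(url: str) -> urllib.request.Request:
    """Build a GET request that identifies itself with the Googlebot user agent."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_googlebot(url: str, timeout: float = 10.0) -> tuple[int, str]:
    """Fetch a URL with the Googlebot user agent and return (status code, body)."""
    with urllib.request.urlopen(googlebot_request(url), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.status, resp.read().decode(charset, errors="replace")
```

Calling `fetch_as_googlebot("http://www.example.com/")` returns the status code and HTML your server sends to a client claiming to be Googlebot, which you can compare with what a normal browser receives.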

Another option is to use a text browser like Lynx to get a rough idea of how your site looks to Google. Google Webmaster Tools also has a feature that can help. On the Webmaster Tools dashboard, click the “+” sign next to the “Labs” link in the left-hand column. You’ll see an option called “Fetch as Googlebot,” as shown in the first screen shot. Click it, and it will fetch your site (or whatever URL you enter) the way Googlebot sees it.

As in the second screen shot, you’ll see the HTML source, just like what you see when you click “View Source” in your browser. You’ll also get a response code, such as 200, which means everything is peachy, or 301, which means “permanent redirect.” And you’ll see what kind of server your website runs on and any CSS files or scripts the page calls.
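If you fetch a page yourself, you can pull out the same details Fetch as Googlebot reports. This is a minimal sketch, assuming you already have the status code, the response headers, and the HTML; `summarize_fetch`, `AssetCollector`, and the short `STATUS_MEANINGS` table are illustrative names, not part of any Google tool.

```python
from html.parser import HTMLParser

# A few common status codes and what they mean to a crawler (not exhaustive).
STATUS_MEANINGS = {
    200: "OK",
    301: "Moved Permanently (permanent redirect)",
    302: "Found (temporary redirect)",
    404: "Not Found",
    500: "Internal Server Error",
}


class AssetCollector(HTMLParser):
    """Collect the stylesheet and external script URLs a page references."""

    def __init__(self) -> None:
        super().__init__()
        self.stylesheets: list[str] = []
        self.scripts: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel and attrs.get("href"):
            self.stylesheets.append(attrs["href"])
        elif tag == "script" and attrs.get("src"):
            self.scripts.append(attrs["src"])  # inline scripts have no src


def summarize_fetch(status: int, headers: dict, html: str) -> dict:
    """Summarize a fetched page: status meaning, server, CSS and script URLs."""
    collector = AssetCollector()
    collector.feed(html)
    collector.close()
    return {
        "status": status,
        "meaning": STATUS_MEANINGS.get(status, "see the HTTP specification"),
        "server": headers.get("Server", "unknown"),
        "stylesheets": collector.stylesheets,
        "scripts": collector.scripts,
    }
```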

One caveat, however, is that it doesn’t always work with PDF files. Google says it’s working on fixing the problem, and if your pages look OK in your browser, chances are they look OK to Googlebot (even if they’re PDFs).

If you run a lot of scripts or have lots of layers on your sites, this can be particularly handy. If your site is mostly simple html, your normal web browser will give you a pretty good idea of what Google sees on your site.

When Googlebot crawls the web, it uses computer algorithms to determine which sites to crawl, how often to crawl them, and how many pages to fetch from each site. It starts with a list of URLs from earlier crawls, augmented with sitemap data. The bot notes changes to existing sites, new sites, and dead links, and uses them to update the Google index. When Googlebot processes each page, it takes in key content tags and attributes such as title tags and ALT attributes. Googlebot can process many content types, but not all; it cannot process the content of some dynamic pages or rich media files.
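Google does not publish how its crawl scheduler works, but the basic loop described above (seed with known URLs plus sitemap URLs, follow links, record dead ones) can be illustrated with a toy breadth-first crawler. This is a sketch over a hypothetical in-memory site, not Googlebot's algorithm; `fetch` is an assumed callback that returns a page's outgoing links, or `None` for a dead URL.

```python
from collections import deque


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl starting from seed URLs.

    fetch(url) returns a list of outgoing links, or None if the URL is dead.
    Returns (pages visited in crawl order, dead links found).
    """
    frontier = deque(dict.fromkeys(seeds))  # de-duplicate seeds, keep order
    seen = set(frontier)
    visited, dead = [], []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        links = fetch(url)
        if links is None:  # an empty list is a live page with no links
            dead.append(url)
            continue
        visited.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited, dead
```

For example, with a hypothetical site `{"/": ["/about", "/blog"], "/about": ["/"], "/blog": ["/blog/post-1", "/old-page"], "/blog/post-1": []}`, seeding with `["/"]` from a previous crawl plus `["/blog/post-1", "/contact"]` from a sitemap and passing `site.get` as `fetch` reports `/contact` and `/old-page` as dead links.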

There has been plenty of talk about how to handle Flash on your site. Googlebot doesn’t cope well with Flash content or with links contained within Flash elements. Google has made no secret of its dislike for Flash content, saying that it is user-unfriendly and doesn’t render on devices like PDAs and phones.

You do have some options, however, such as replacing Flash elements with something more accessible like CSS/DHTML. Web design using “progressive enhancement,” where richer layers of design and functionality are built on top of a basic, accessible version of the page, allows all users, including the search bots, to access content and functions. Amazon has a “Create your Own Ring” tool for designing engagement rings that is a good example of this type of functionality. Also, sIFR (Scalable Inman Flash Replacement) is a replacement technique that uses CSS, Flash, and JavaScript to display text in any font, even one that isn’t installed on the user’s computer, as long as the user can display Flash. sIFR is now officially approved by Google.

Google says that the bottom line is to show your users and Googlebot the same thing. Otherwise your site could look suspicious to the search algorithms. This rule takes care of a lot of potential problems, like the use of JavaScript redirects, cloaking, doorway pages, and hidden text, which Google strongly dislikes.

Google support engineers say that Google looks at the content inside “noscript” tags, but that content should accurately reflect the Flash-based content it stands in for, or else Googlebot may think it’s cloaking.

According to Google engineer Matt Cutts, it’s difficult to pull text from a Flash file, but Google can do a fair job of it. It uses the Search Engine SDK from Adobe (formerly Macromedia). Most search engines are expected to adopt it as the standard for pulling text out of Flash graphics. If you use Flash regularly, you might consider getting the tool as well and seeing for yourself what kind of text it pulls out of your graphics. In fact, Google may work with Adobe on updates to the tool.

It isn’t hard to find people in the mainstream media (which, for good or ill, really is dying) to say that the so-called Long Tail is bunk, and blogging was a fad. Sure, a few years ago, everyone was starting blogs all over the place, and as a result, there are abandoned blog carcasses littering the internet. I know this because some of them are mine. Blogging was an untried, untested medium, and quite naturally, when people saw how easy it was to set up a blog, they set up millions of them. The line of thinking for lots of people (myself included, admittedly) was, “This is awesome! I can have a blog for my personal stuff, a blog about work, a blog with pictures of my kids and pets, and a blog with recipes,” etc.

Setting up a blog is easy. Keeping a blog over a period of years is hard. So the “Blogging is Dead” pundits have a point.

In Part I, we talked about how to determine if your site has been banned or penalized by Google and what to do about it. This part delves more into Google penalty folklore, and how the search engine is constantly changing and evolving to counter nefarious work-arounds that people develop to game the search engine world.

In August and September 2009, Google made changes so that a penalized site is demoted by 50 places in the rankings. At the same time, varying your anchor text grew even more important than it had been in previous years. The “rules” of building natural anchor text changed a lot. That doesn’t mean you should stop using natural anchor text. More on that later.

The so-called “minus 50 penalty,” a filter in Google, operates at the domain, page, and keyword level. In other words, pages drop 50 positions in the rankings because the keywords in the links pointing to them, whether internal or external, are over-optimized.

What does this mean? Un-optimize your keywords?

Actually, yes.

Since the moods of Google change fairly rapidly, the things that worked last year may not work now. If you’re hit with a penalty, all you can do is fix the problem, suck it up, and move on starting today. Various webmasters have said that it takes 60 days to get rid of the penalty, so the sooner you deal with it the better.

Something else you must do is vary your anchor texts. Don’t use one hot keyword as the anchor text for all your links, and don’t just alternate between its singular and plural forms. Use everything from natural language phrases to fragments of keyword phrases to misspellings and typos. What you don’t want is to overdo linking with the hot keywords and phrases. Write your anchor texts as if you don’t care about squeezing every last bit of juice out of a particular keyword or phrase.

When it comes to anchor text variety, your best bet for figuring it out is to check out what your best competitors are doing because there’s no exact number of times a keyword text can be used to anchor links. The key is not to be too far out of line when compared to your competition.
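Comparing yourself with competitors comes down to simple arithmetic once you have each site's backlink anchor texts (from whatever backlink tool you use). This sketch measures what share of anchors exactly match one keyword and flags your site when that share is well above the competitor average; the function names and the 1.5× `tolerance` are illustrative assumptions, not a threshold Google has published.

```python
def exact_match_share(anchors, keyword):
    """Fraction of anchor texts that exactly match keyword (case-insensitive)."""
    if not anchors:
        return 0.0
    target = keyword.strip().lower()
    return sum(a.strip().lower() == target for a in anchors) / len(anchors)


def compare_to_competitors(my_anchors, competitor_anchors, keyword, tolerance=1.5):
    """Return (my share, competitor average share, flag).

    The flag is True when my exact-match share exceeds the competitor average
    by more than `tolerance` times. 1.5 is an arbitrary illustrative threshold.
    """
    mine = exact_match_share(my_anchors, keyword)
    shares = [exact_match_share(c, keyword) for c in competitor_anchors]
    average = sum(shares) / len(shares) if shares else 0.0
    return mine, average, mine > average * tolerance
```

With hypothetical data where 6 of your 10 anchors read “green energy” while two competitors sit at 40% and 25%, the function returns a share of 0.6 against an average of 0.325 and raises the flag.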

Page level penalties are becoming more common, whereas before, penalties were usually applied at the domain level. In many cases the page level penalties are hitting home pages of sites. Key phrase specific penalties are becoming more common too. This happens when there are easily detected paid links pointing to a page with exact anchor text for one key phrase. The problem is, the page can continue to get search traffic for some phrases, but not the one you want.

Apparently the reason Google does it this way is that sometimes Google susses out paid links, and sometimes it doesn’t. If the algorithm is going to hand out penalties for paid links, it needs to avoid hurting a site too badly when it wrongly thinks there are paid links. Therefore, it limits the penalty to one specific page and one specific key phrase so that an entire site isn’t penalized in the event of a mistakenly applied penalty. Of course, the best thing to do if you have paid links is to get rid of them and wait for your site to be crawled again.

These page level and key phrase specific penalties are sometimes hard to detect, but there are some things you can do that might give you clues that your site is on the receiving end of a page level key phrase specific penalty.

The moral of this long story is that while domain level penalties appear to have peaked, you have to watch out for page level and keyword specific penalties. While Google’s intentions here were probably honorable, these penalties can be harder to figure out than domain level problems, particularly the keyword and key phrase level ones. If you have paid links or shady links, or happen to link to a nice-looking site that itself has questionable link issues, you need to fix these things now. It isn’t always easy to find out which of the sites you link to themselves link to porn or other dodgy sites, but it’s worth checking. The bottom line is that if you shun all questionable practices, build links organically, and keep providing fresh, relevant content with natural-sounding anchor text, your site will bubble upward and is very unlikely to be penalized.

Has your website’s search engine ranking dropped drastically for no apparent reason? It is possible that your site has been hit by a domain level penalty from Google’s web spam team. A domain level penalty means your whole site has been demoted drastically in the search engine rankings – not just certain pages of it. The bad news is this can be difficult to pin down. While there are tools to help you figure out if your site has been penalized, there is a degree of speculation about it.

When your site’s Google search engine ranking takes a hit suddenly, then you can pretty easily conclude that you have done something wrong in the opinion of Google. They’re not all that specific about what happens when they “catch” you doing something wrong, and exactly how they penalize you. But in general, there are a few categories into which these mistakes fall, and there are ways to make a reasonable conclusion about which sin you’ve committed.

Cataloging the various Google penalties people have come across is like trying to herd cats. Whatever relationship exists between the punishment and the crime either isn’t transparent or isn’t applied evenly across the board. Being banned is, of course, as bad as it gets, since your site suddenly can’t be found. This usually only happens when some kind of serious deception is going on at the site. But a few activities have been pinned down as sending the Google gods into a frenzy.

Domain level redundancy is one thing that can get you penalized. This is what happens when webmasters clone sites. In other words, they point several domains (through the Domain Name System, or DNS) at the same directory, so each domain displays exactly the same site.

If you publish the same content on several pages or sites, or if someone copies your site or content, Google will ding you for content redundancy. If you think someone else is ripping off your content, check Copyscape to see if you can track it down.
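Google doesn't say exactly how it spots redundant content, but exact copies, such as cloned domains serving one directory, are easy to find yourself by fingerprinting each page's text. A minimal sketch, assuming you already have each URL's extracted text: it hashes the text after lowercasing and collapsing whitespace, so it only catches identical copies, not reworded ones. `fingerprint` and `find_duplicates` are hypothetical helper names.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """SHA-256 of the text after lowercasing and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict) -> list:
    """Group URLs whose normalized text is identical. pages maps URL -> text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```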

Purchasing a number of domains, each of which addresses a separate keyword target, is now considered punishable.

If Google thinks you’re a link seller, your links will rapidly mean zilch. If your links do not pass on PageRank juice, they’re worth less than the pixels they’re written on, even if you are using them on your own site.

There is a certain amount of mystery surrounding the Google “sandbox” theory. Some people believe that young sites are penalized. You don’t see this penalty unless you try to SEO the heck out of a site within the first few months of its existence. Apparently your site has to pay a certain amount of dues before your SEO starts getting respect from the big guys.

Does your site support (or appear to support) porn, gambling, or “male enhancement” sites? Well, duh. Of course you’re going to get penalized. Same with spam.

If your site may be perceived as a threat to national security, like if you sell fake IDs or something, your site will be penalized. If a third party hijacks your search engine rankings through cloaking and a proxy, unfortunately, your site gets hit with a penalty. This one is mostly Google’s fault. The same is true if an affiliate uses content from your site, or if your competitor intentionally links to you from “bad neighborhood” (porn, gambling, etc.) sites.

And, of course, the Google algorithm isn’t perfect. Algorithm quirks can have the same effect as a penalty if they make your site unreachable.

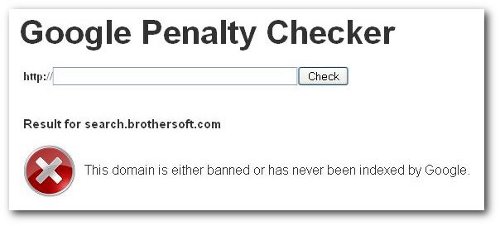

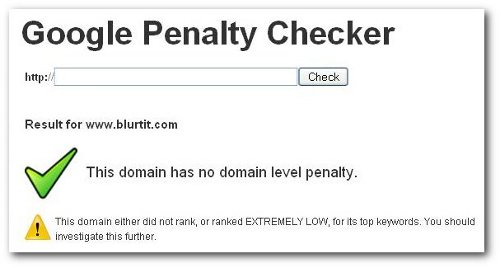

If you want to find out whether your site has been penalized, you can use our free penalty checker tool at http://tools.lilengine.com/penaltychecker/.

You just input the address of the site you want to check out, and you’ll get a result either like screen shot one (when a site does appear to have been banned or penalized) or screen shot two (when a site is not believed to have been penalized).

If you suspect your site has been penalized, there are a number of steps you can take to find out whether it has, and why. Once you fix the problems, you can request reinstatement in Google’s index and eventually get back in its good graces.

On Jan 27, Google promoted its social searching algorithm from the laboratory to “beta,” which means it’s now a regular search option. Reactions so far are mixed. Social searching has been praised by those who care what their friends on Twitter think of the new iPad, and criticized by those who wonder how anyone could care what their Twitter friends think of the iPad.

To partake of this new morsel on Google’s search buffet, you need to sign into your Google account and update your Google profile to include links to your accounts on social sites like Twitter and Facebook. But the Facebook link is a little deceptive. The only thing Google can index from Facebook is the public part of people’s profiles. There isn’t a way for Facebook users to make their status updates available to the entire web.

So, as of now, Google won’t search your Facebook updates for relevant things you have to say about a topic one of your Facebook friends is researching. But if there is something relevant in the public part of your Facebook profile, Google can use that. Somewhat ironically, FriendFeed, which is now owned by Facebook, is geared toward public information sharing, so if you are on FriendFeed and modify your Google profile to reflect this, that content will eventually become available.

Search engine optimization (SEO) and link building are two of the most important things that determine the success of your website. You may wonder if you should work the SEO in house, or if you should outsource it from the start. There are a couple of situations in which you would be better off outsourcing from the get-go:

But for many website owners, doing SEO and link building in-house is a good first step. Sometimes it can be done perfectly well in-house, but if not, you can always hire an SEO expert if you need one. If you know the general ideas behind SEO and the importance of link building, there is a ton of free guidance online, much of it provided by Google, the search engine that much SEO is directed at.

Link building is one of those things that can’t be rushed too much. You don’t need loads and loads of links, but you need a few high quality back links that have no association whatsoever with spammers. Search engines do not take link spamming lightly. They will either demote your site in the rankings or kick it out of their indexes altogether and make you basically start over and prove to them that you’ve reformed your ways before letting you back in.

The sites that provide links to you give search engines context about your site’s content, and can give the search engine algorithm indications of its quality. The links have to be relevant, however. A link from a great website that has absolutely nothing to do with your site’s content won’t do you much, if any, good. Link exchanges and partnerships that are developed strictly for the sake of providing back links – relevant or not – dilute the quality of the links and violate Google’s webmaster guidelines. Your search engine rankings may drop drastically as a result of using these schemes. Links need to be obtained the old fashioned way: by earning them with good, unique content.

But while back links are important to your search engine ranking, they aren’t everything. After all, a brand new site may contain great, original content, but may not have had time to get any back links yet. If this is the case, there are many other things the site owner can do to boost search engine results while those back links develop organically.

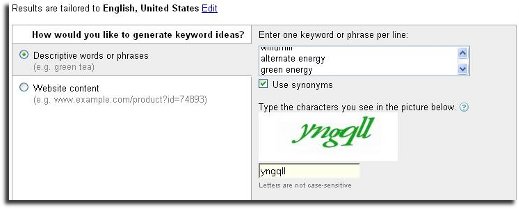

Keywords are very important. If you are not sure which keywords you should use, then Google has a keyword tool that can help you. Suppose my website was about “green” energy products. That’s a hot topic these days, so keywords are going to be competitive. How can I choose the best keywords? As you can see in the first screen shot, I used the Google keyword tool to research the three keywords “windmill,” “green energy,” and “alternate energy.”

The second screen shot shows just part of a huge list of suggested keywords for my site. Now, if I choose keywords that have enormous monthly search volumes, I’m going to be competing with a lot of other sites, and with those keywords, I’ll probably inch my way up the search engine rankings at best.

The “sweet spot” I’m looking for is keywords that are relevant to my site that have search volumes in the tens of thousands rather than the hundreds of thousands per month. The keywords “windmill energy” and “windmill electricity” look promising. They come in at around 10,000 searches locally and globally, so I have a better chance of competing, and yet they’re keywords that are relevant and could eventually become competitive, hopefully after my site has worked its way to the top of the search engine rankings.
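Once you export the keyword tool's suggestions with their monthly volumes, picking the “sweet spot” is a simple filter. In this sketch the volume band (5,000 to 99,999 searches a month) is an assumption chosen to match “tens of thousands rather than hundreds of thousands,” and the sample volumes in the usage note are invented for illustration. Relevance is still your call; the code only filters on volume.

```python
def sweet_spot(keywords, low=5_000, high=99_999):
    """Return (keyword, volume) pairs with low <= volume <= high, busiest first.

    keywords maps keyword -> monthly search volume. The default band is an
    illustrative assumption, not a figure from Google.
    """
    picks = [(kw, vol) for kw, vol in keywords.items() if low <= vol <= high]
    return sorted(picks, key=lambda kv: kv[1], reverse=True)
```

With made-up volumes such as `{"green energy": 450_000, "windmill energy": 12_000, "windmill electricity": 10_500, "home wind turbine kit": 2_400}`, only the two windmill phrases survive the default band.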

Another way to use the keyword tool (looking at the first screen shot again) is with website content I like. I would search for a high-ranking website about green energy and feed that site’s URL to the keyword tool, which then uses the content of that site to generate a list of keywords.

There are a number of other things you can do to get your SEO process going in-house. Submit a sitemap to the search engines so that their bots can crawl your site easily. Make sure your site has an understandable hierarchy and that each page is reachable from at least one static link. Use relevant keywords in your image metatags, and make sure your title elements and ALT attributes are descriptive and accurate. Fix or remove broken links. Make sure each page has a reasonable number of links: one hundred or more is too many, so keep it down to 10 or 20.
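If your site doesn't already produce a sitemap, a basic one is easy to generate. This sketch builds an XML sitemap in the sitemaps.org format that Google and other engines accept, using Python's standard `xml.etree.ElementTree`; the example URLs and the `build_sitemap` name are hypothetical, and it only fills in the required `loc` element plus an optional `lastmod`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." instead of ns0: prefixes


def build_sitemap(urls, lastmod=None):
    """Return a sitemaps.org XML sitemap for the given absolute URLs."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url in urls:
        entry = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = url  # & etc. are escaped
        if lastmod:  # optional W3C date, e.g. "2010-01-27"
            ET.SubElement(entry, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the output as `sitemap.xml` at the root of your site and submit its URL in Google Webmaster Tools.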

These practices won’t take an inordinate amount of time, and Google has a lot of webmaster tools that show you exactly what to do to improve your site’s search engine rankings. If you try the basics and your site does not improve in the rankings, and you have fresh, original content, then it may be time to call in an SEO expert. But in a lot of cases, if you approach it methodically, you can do wonders for your site’s search engine rankings (and Google PageRank) in-house. In fact, if you have summer interns, it would be a great project for one of them.

Lil Engine, an established resource of information on SEO and internet marketing, is excited to release OSC, Organic Search Certification, as a way to verify and recommend professional SEO companies.

January 27, 2010, Winston-Salem, NC: Furthering its ongoing effort to provide useful information and tools to site owners, Lil Engine now offers a certification for SEO companies. OSC, which stands for Organic Search Certified, is a vetting process to verify the professionalism and quality of a company’s SEO techniques. Unfortunately, a significant number of businesses in the SEO industry, knowingly or unknowingly, are not performing quality SEO work. Concerns when choosing an SEO company range from whether it can achieve the results you need at the price it quotes to, worse, whether it will engage in ‘black hat’ SEO tactics to achieve short-term results that can often lead to a negative outcome in the long term. The challenge of choosing a reputable search engine optimization company is compounded by the fact that many are required to keep their client rosters confidential, making it difficult to publicize case studies and references.

An OSC certified company has been independently analyzed and examined to provide an additional level of reassurance that the company offers value, quality, and ethics. In order to receive Organic Search Certification from Lil Engine, top SEO companies must pass a thorough company analysis including complete on-site and off-site examination of client referrals. OSC companies have:

SEO companies benefit from the certification by being given a company listing on Lil Engine, and by being able to display a verifiable banner in their own marketing materials. A company can apply for certification at http://www.webmoves.net/blog//seo-certification-evaluation-criteria/.

Contact:

Jen Thames

admin@lilengine.com

Lil Engine is a free, comprehensive resource for webmasters and site owners sharing internet marketing information. Offerings include useful SEO tools, an SEO blog, a database of expert guides, a forum, and a directory.

Face it: even if your website is a personal blog on a topic that you are passionate about, and isn’t an e-commerce site, it’s still dispiriting to look at your stats and find that only half a dozen people have visited. Everybody wants their site to get noticed. And there are enough web users that no matter what the topic of your site, there are plenty of people out there who are interested in it. What your site needs is probably some search engine optimization (SEO) to bump the numbers up and get more eyeballs on your page.

Ultimately, the success of a site depends on your ability to effectively communicate with readers. There is no substitute for content that people like, though there are things you can do to tip more traffic your way. A lot of people with blogs (whether they are designed to drive traffic to e-commerce sites or not) discover that there are a number of SEO plugins that can automatically do some of the SEO work for them. These plugins, such as Google XML Sitemaps, help the major search engines index your site better. With a sitemap, the crawlers have a much easier time “seeing” the structure of your site and retrieving it efficiently. Google XML Sitemaps notifies all the major search engines whenever you post new content.

With plugins, however, there can be too much of a good thing. With more than four or five plugins, your site may slow down, and in some cases plugins can conflict with each other, in which case neither one helps your site. Not all SEO tools are plugins, however, and a number of SEO tools help with things you may not be so sure how to do, like generating meta tags.

That said, since so many new webmasters use WordPress to run their sites, here are a few of the more popular plugins for WordPress SEO and what they do.

SEO Smart Links automatically links the keywords in your posts to corresponding posts and pages. This is awesome because it keeps visitors on your site, clicking through and racking up page views. In addition to the automatic functions, you can specify custom keywords and the URLs you want them to link to.
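The core idea behind automatic keyword linking can be sketched in a few lines. This is not SEO Smart Links' actual code, just an illustration assuming the post is plain text with no existing markup: one regular expression matches all keywords (longest first, so “green energy” wins over “energy”), and each keyword is linked at most `max_per_keyword` times. The `auto_link` name and the keyword-to-URL mapping are hypothetical.

```python
import html
import re


def auto_link(text, links, max_per_keyword=1):
    """Wrap keyword occurrences in <a> tags. links maps keyword -> URL.

    Matching is case-insensitive on whole words, done in a single pass so
    text that was just linked is never re-linked.
    """
    if not links:
        return text
    keywords = sorted(links, key=len, reverse=True)  # prefer longer phrases
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, keywords)) + r")\b", re.IGNORECASE)
    lookup = {k.lower(): url for k, url in links.items()}
    used = {}

    def replace(match):
        word = match.group(1)
        key = word.lower()
        if used.get(key, 0) >= max_per_keyword:
            return word  # already linked enough times; leave as plain text
        used[key] = used.get(key, 0) + 1
        return f'<a href="{html.escape(lookup[key], quote=True)}">{word}</a>'

    return pattern.sub(replace, text)
```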

Robots Meta is a simple plugin that lets you add meta tags that hide comment feeds, internal search result pages, and admin pages from the search engines, keeping your SEO from being “diluted” by these pages.
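Under the hood, hiding a page from search engines means emitting a `noindex` directive. This sketch (not the plugin's code) shows the decision for a few page types; the type names are hypothetical labels. HTML pages get a robots meta tag, while feeds, which are XML and have no `<head>`, need the equivalent `X-Robots-Tag` HTTP response header instead.

```python
# Hypothetical page-type labels for illustration.
HTML_NOINDEX = {"search_results", "admin"}
FEED_NOINDEX = {"comment_feed"}


def robots_for(page_type):
    """Return the robots meta tag (or None) and extra HTTP headers for a page type."""
    if page_type in HTML_NOINDEX:
        # "follow" still lets crawlers follow the links on the page.
        return {"meta": '<meta name="robots" content="noindex,follow">', "headers": {}}
    if page_type in FEED_NOINDEX:
        # Feeds are XML, so the directive travels in a response header.
        return {"meta": None, "headers": {"X-Robots-Tag": "noindex"}}
    return {"meta": None, "headers": {}}  # default: indexable, nothing to add
```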

HeadSpace is a popular plugin that is capable of automatically generating post tags from a post’s content. This is terrific for people who have a hard time categorizing and tagging posts.
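HeadSpace's own method isn't described here, but the simplest way to suggest tags from a post's content is word frequency with common words filtered out. This sketch assumes a tiny illustrative stop-word list and a minimum word length of three; real tools use much longer lists and smarter phrase detection. `suggest_tags` is a hypothetical name.

```python
import re
from collections import Counter

# A tiny stop-word list for illustration; real tools use much longer ones.
STOP_WORDS = {"a", "an", "and", "are", "as", "at", "be", "by", "for", "from",
              "how", "in", "is", "it", "of", "on", "or", "that", "the", "this",
              "to", "with", "you", "your"}


def suggest_tags(text, n=5, min_length=3):
    """Return the n most frequent non-stop-words in text as candidate tags."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) >= min_length)
    return [word for word, _ in counts.most_common(n)]
```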

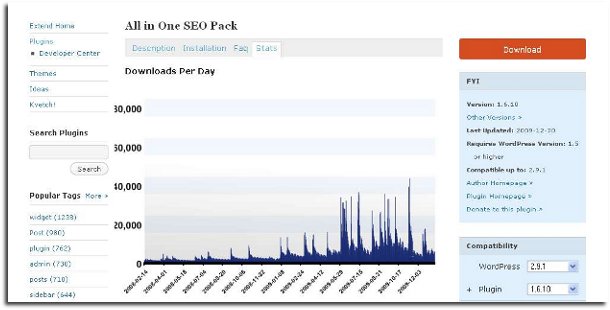

All In One SEO Pack is the most popular SEO plugin in the WordPress plugin repository. It allows you to optimize parts of your site like the site title, the keywords, and the page titles. It can also generate keywords based on the content of the current post. As you can see from the screen shot, it is extremely popular, at times being downloaded over 40,000 times in a day!

One thing you need to do to be able to optimize your site is to see how it looks to the search engine crawlers. You also want to be able to generate meta tags with some help for optimum results. It is good to see if you have duplicate (or nearly duplicate) pages on your site (which can harm your search engine ranking), and to see if anyone else out there is stealing your content (and readers). Here are a few tools that you might consider.

Similar Page Checker, by webconfs.com, helps you determine whether your web page content is too similar to another page on the same website. Search engines will ding you for having pages on your website that are too similar. All you do is enter the URLs, and Similar Page Checker compares the content.
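We don't know the exact formula Similar Page Checker uses, but a standard way to measure how similar two pages are is to break each into overlapping word sequences (“shingles”) and compute the Jaccard similarity of the two sets: shared shingles divided by all distinct shingles. This sketch uses three-word shingles, an assumption you can tune with `k`.

```python
import re


def shingles(text, k=3):
    """Set of k-word shingles (overlapping word sequences) from text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def similarity(a, b, k=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two empty pages are trivially identical
    return len(sa & sb) / len(sa | sb)
```

Two pages that differ by a single word, such as “Our blue widgets are the best widgets in town” and “Our red widgets are the best widgets in town,” share 5 of 9 distinct shingles, a similarity of about 0.56.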

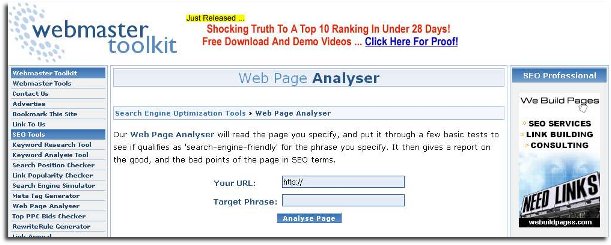

Webmaster Toolkit’s Web Page Analyzer checks your page to ensure that you have keywords in bold text, header text, link text, document titles, meta keywords, and meta descriptions. This is considered an “entry level” tool, but it may be perfect if you’re just starting out with SEO for your site. As you can see from the screen shot, it couldn’t be simpler: you enter URLs and keywords and Web Page Analyzer checks for optimization.
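The checks Web Page Analyzer runs are easy to approximate yourself. This sketch is not that tool's code; it uses Python's `html.parser` to collect the text in the title, headers, bold text, link text, and meta description and keywords, then reports where a keyword appears. It assumes reasonably well-nested HTML and uses a naive substring match (so “wind” also matches “windmill”); `keyword_placement` and `PlacementParser` are hypothetical names.

```python
from html.parser import HTMLParser

# Element name -> the label it is reported under.
CONTEXTS = {
    "title": "title",
    "b": "bold", "strong": "bold",
    "a": "link text",
    "h1": "headers", "h2": "headers", "h3": "headers",
    "h4": "headers", "h5": "headers", "h6": "headers",
}


class PlacementParser(HTMLParser):
    """Collect page text by where it appears (title, headers, bold, links, meta)."""

    def __init__(self):
        super().__init__()
        self.text = {label: "" for label in CONTEXTS.values()}
        self.text["meta description"] = ""
        self.text["meta keywords"] = ""
        self.stack = []  # labels of the tracked elements currently open

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attrs = dict(attrs)
            name = (attrs.get("name") or "").lower()
            if name in ("description", "keywords"):
                self.text["meta " + name] += " " + (attrs.get("content") or "")
        elif tag in CONTEXTS:
            self.stack.append(CONTEXTS[tag])

    def handle_endtag(self, tag):
        # Naive: assumes tracked elements are properly nested.
        if tag in CONTEXTS and self.stack and self.stack[-1] == CONTEXTS[tag]:
            self.stack.pop()

    def handle_data(self, data):
        for label in set(self.stack):
            self.text[label] += " " + data


def keyword_placement(html_text, keyword):
    """Map each placement to whether the keyword appears there (substring match)."""
    parser = PlacementParser()
    parser.feed(html_text)
    parser.close()
    kw = keyword.lower()
    return {label: kw in text.lower() for label, text in parser.text.items()}
```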

Code to Text Calculator by Stargeek tells you the ratio of text to code. The higher the ratio of text to code on a page, the better. You enter URLs and it calculates the ratio.
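Stargeek doesn't document its exact formula here, so this sketch assumes one common definition: visible text characters (excluding `<script>` and `<style>` contents, with whitespace collapsed) as a percentage of the page's total characters. `VisibleText` and `text_to_code_ratio` are hypothetical names.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that is outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0  # depth inside script/style elements

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def text_to_code_ratio(html_text):
    """Visible-text characters as a percentage of all characters in the page."""
    if not html_text:
        return 0.0
    parser = VisibleText()
    parser.feed(html_text)
    parser.close()
    text = " ".join(" ".join(parser.parts).split())  # collapse whitespace
    return 100.0 * len(text) / len(html_text)
```

For `<html><body><p>Hello world</p></body></html>`, 11 of 44 characters are visible text, a ratio of 25%.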

Copyscape is a workhorse tool that helps you find websites that are ripping off your content. It also finds websites that quote you. The Copyscape website has information about what you should do if you find another site taking your content without permission.

If you find out that one of your URLs is banned from the Google database, you’ll probably be angry about it, but it’s better to know than not to know. Google Banned Checker Tool 1 lets you know whether your URL is in the Google database. Keep in mind that if your site is new, it may not show up in the Google database yet, but that doesn’t mean you’ve been banned. This tool is primarily for people who think their site has been punished by the Google gods.

Cache.it by cached.it shows you a lot of information you probably don’t know about your site, like your IP address, meta tag text, Alexa ranking, ping time, and PageRank.

Meta Tag Generator by SearchBliss does exactly what it says, creating up to nine meta tags.

Another “spider-view” tool is Search Engine Spider Simulator by webconfs.com. It shows you what your site looks like to the web spiders.

SEO isn’t just in the purview of big web companies. You can use SEO tools for whatever kind of site you have, whether you’re selling fishing tackle or reviewing movies. There are a lot of tools available to help you get your site indexed and seen by a lot more people, and these tools are worth your while.

Submitting articles to article directories is what is known as “off page optimization.” Off page optimization is doing what you can to drive traffic to and create back links to your actual web pages. The best search engine optimization involves both on page and off page optimization. Submitting articles to directories won’t help much if your pages have little or no useful content, or if your pages themselves have not been optimized through the use of keywords, meta tags, and anchor text links. But once you have your pages in top shape, submitting articles to directories can definitely give your website’s traffic a boost.

Articles promoting your website should soft-sell your site. In fact, many article directories have strict rules about how many so-called self-serving links you can include in your articles. That’s because people don’t look for articles in order to be sold a piece of software or a new nutritional supplement: they read articles to gather information. Your job is to provide that information and also to give the reader a way to get to your site if he or she so chooses.

Even the article directories that are quite strict about links to your website in the content do provide “about us” boxes or “resources” boxes where you can include links to your site, and you should definitely do this. Anyone interested in the article content enough to want more will be looking to the resources box to link to more information. Because there are so many free article submission directories, you have plenty of outlets for articles about your site. You won’t have a huge influx of traffic overnight, but over time, your PageRank will increase, as will your traffic. Below are several of the advantages and a few disadvantages to directory submission of articles.

As helpful and useful as article directory submission is, there are a few downsides to it.

As an example, look at the first screen shot, where I have entered three woodworking terms into the entry box: woodworking tools, sanding blocks, and table saws. In the second screen shot you see just part of a long list of suggested keywords along with their relevance and search volume. At first glance it looks like the terms “woodworking hand tools,” “antique woodworking tools,” and “woodworking tool” could be good keyword candidates because they’re highly relevant, and the search volume is high, but not so high that I can’t work my way past the competition.

The moral of the story is: when you’re writing articles for submission to article directories, ignore keywords at your peril. And, just FYI, the top five article directories, according to vretoolbar.com and based on Google PageRank and Alexa Ranking, are as follows: ezinearticles.com (Alexa=131; PR=6), articlesbase.com (Alexa=451; PR=5), buzzle.com (Alexa=1305; PR=5), goarticles.com (Alexa=1601; PR=6), and helium.com (Alexa=1872; PR=6).