Avoiding Infinite Space for Googlebot

It may sound like a phrase lifted from one of Stephen Hawking’s books, but on the web, “infinite space” is simply defined as “very large numbers of links that usually provide little or no new content for Googlebot to index.” Needless to say, that’s a bad thing. It can cause bandwidth congestion or, worse, keep Googlebot from fully indexing your site’s content.

A good example is a calendar with a “Next Month” link. Since time is (almost) infinite, a crawler can keep following these links one month at a time to dates far in the future without finding much usable content to index. In effect, the search spider gets trapped in “infinite space” instead of moving on to your pages that actually have content. Googlebot is smart, but apparently not smart enough just yet: it can recognize some of these URL patterns on its own, but it has its limits, so it sometimes needs help from site owners.
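To see why a “Next Month” link is a trap, here is a toy Python model. It is an illustration only, not a description of how Googlebot actually crawls. It assumes a hypothetical site with five content pages and a calendar whose month pages (hypothetical URLs under `/calendar/month/`) each link to the following month. A breadth-first crawler with a fixed budget of fetches never runs out of calendar URLs.

```python
from collections import deque
from datetime import date

# Hypothetical site: a home page, five content pages, and a calendar in which
# every month page links to the following month ("Next Month").
ARTICLES = [f"/articles/{i}" for i in range(1, 6)]


def next_month(d: date) -> date:
    """Return the first day of the month after d (December rolls over)."""
    return date(d.year + d.month // 12, d.month % 12 + 1, 1)


def links_on(url: str) -> list[str]:
    """Return the outgoing links of a page on the hypothetical site."""
    if url == "/":
        return ["/calendar/month/2008-01", *ARTICLES]
    if url.startswith("/calendar/month/"):
        year, month = map(int, url.removeprefix("/calendar/month/").split("-"))
        return [f"/calendar/month/{next_month(date(year, month, 1)):%Y-%m}"]
    return []  # content pages link nowhere new in this toy model


def crawl(start: str, budget: int) -> tuple[list[str], int]:
    """Breadth-first crawl that stops after `budget` fetches.

    Returns the fetched URLs and the number of URLs still waiting in the queue.
    """
    queue, seen, fetched = deque([start]), {start}, []
    while queue and len(fetched) < budget:
        url = queue.popleft()
        fetched.append(url)
        for link in links_on(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return fetched, len(queue)


if __name__ == "__main__":
    fetched, pending = crawl("/", budget=100)
    calendar_pages = sum(u.startswith("/calendar/") for u in fetched)
    print(f"{calendar_pages} of {len(fetched)} fetches were calendar pages; "
          f"{pending} URL(s) still queued")
```

With a budget of 100 fetches, the crawler picks up all five articles early, but 94 fetches go to calendar pages and the queue still holds the next month, so without the budget the crawl would never end.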

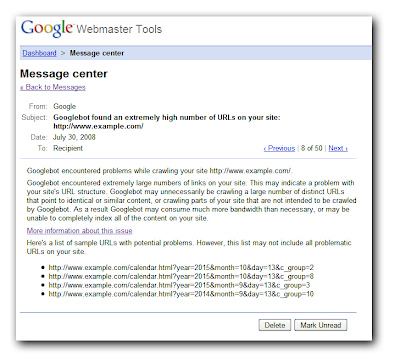

If Google detects this problem on your site, it will notify you through the Message Center in Webmaster Tools, so check your messages there from time to time.

If you do receive the message above, take heart: the problem can be corrected. According to Google:

1. One fix is to eliminate whole categories of dynamically generated links using your robots.txt file. The Help Center has lots of information on how to use robots.txt. If you do that, don’t forget to verify that Googlebot can find all your content some other way.

2. Another option is to block those problematic links with a “nofollow” link attribute. If you’d like more information on “nofollow” links, check out the Webmaster Help Center.
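Both options can be sketched in a few lines of Python. The sketch assumes a hypothetical site whose generated month pages live under `/calendar/month/`; the helper names `blocked_urls` and `next_month_link` are also hypothetical. For option 1, it uses the standard library’s `urllib.robotparser` to check that the rule blocks the calendar pages while your real content pages stay crawlable. Keep in mind that `robotparser` only does simple prefix matching, while Googlebot also understands `*` and `$` wildcards, so treat this as a rough check rather than a reproduction of Googlebot’s behavior. For option 2, `next_month_link` shows what a “Next Month” link carrying `rel="nofollow"` looks like.

```python
import html
from urllib import robotparser

# Hypothetical robots.txt (option 1): keep Googlebot out of the
# month-by-month calendar pages.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /calendar/month/
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt forbids `agent` to fetch."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(agent, u)]


def next_month_link(href: str) -> str:
    """Render a "Next Month" link marked rel="nofollow" (option 2)."""
    return f'<a href="{html.escape(href)}" rel="nofollow">Next Month</a>'


if __name__ == "__main__":
    # Pages you want indexed should NOT show up in this list.
    urls = [
        "https://www.example.com/events/summer-party",
        "https://www.example.com/calendar/month/2031-05",
    ]
    print(blocked_urls(ROBOTS_TXT, urls))
    print(next_month_link("/calendar/month/2008-02"))
```

If a page you want indexed shows up in the blocked list, the rule is too broad. Even when the rule is right, you still need to make sure Googlebot can reach the events themselves through some other path, such as regular links.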

But what I’d really like is for Google to improve its bot, because problems like this should be invisible to site owners, who have other things to think about.