Set-up and Use of Google Webmaster Tools

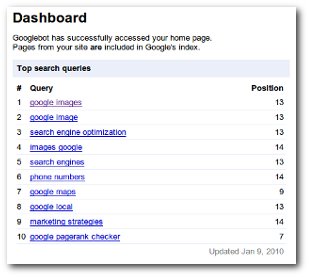

Google Webmaster Tools is a free service provided by Google to help new and experienced webmasters check on their indexing and raise the visibility of their website(s). Among the tools in Google Webmaster Tools are those that check the crawl rate, list sites linking to the user’s site, determine which keyword searches on Google bring the user’s site into the search engine results pages (SERPs), determine click-through rates of SERP listings, show statistics on how Google indexes the user’s site, create a robots.txt file, and submit sitemaps.

The range of tools offered in Google Webmaster Tools can help webmasters significantly raise their profile through search engine optimization (SEO) and traffic generation. To make Google Webmaster Tools work with your particular site, you have to install a snippet of code on your site. Google Webmaster Tools makes a great partner to Google Website Optimizer when it comes to making your website as prominent as possible.

If, for example, your site has a page on which you offer your visitors a free report or software in exchange for their email address, you could use Google Website Optimizer to test two versions of that page and see which one converts better. This lets you streamline your SEO efforts and cuts back on some of the trial and error.

Google Webmaster Tools was launched in 2006 to help webmasters create sites that are friendlier to search engines. There is also the Google Webmaster Tools Access Provider Program, which lets domain hosts use Google’s application programming interface (API) to get their customers up and running with Google Webmaster Tools.

There are many steps you can take to help Google find, rank, and index your site. They can be classified as Design and Content Guidelines, Technical Guidelines, and Quality Guidelines.

Design and Content Guidelines

Every site needs a clear hierarchy and text links. Each page needs to be reachable from one or more static text links.

The sitemap should give visitors links to the important pages of your site.

- Make sure your site has good content, and make sure pages accurately describe the content.

- Think of the words visitors probably type to find your site and include those actual words in your content.

- Important names, links, or content should be in written text rather than pictures. The Google crawler doesn’t “see” text contained in images.

- Have <title> elements and ALT attributes that are accurate and descriptive.

- Look for dead links and improper HTML.

- Keep the number of links per page under 100.

- Give images descriptive filenames and surround them with descriptive text.

- Text embedded in images is “invisible” to the image bot.
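
Several of the checks in the list above can be automated. The following is a minimal sketch, using only Python’s standard library, that reads a page’s title, counts its links, and lists images without ALT text. The class name `PageAudit`, the function `audit`, and the sample markup are hypothetical names chosen for illustration; this is not part of Google Webmaster Tools.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collects a few on-page signals: title text, link count, images lacking ALT."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.link_count = 0
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "a" and attrs.get("href"):
            # Count only anchors that actually link somewhere.
            self.link_count += 1
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # Record the image source when ALT is missing or empty.
            self.images_missing_alt.append(attrs.get("src", ""))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a small report for one page of HTML."""
    parser = PageAudit()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "link_count": parser.link_count,
        # The guideline above asks for fewer than 100 links per page.
        "too_many_links": parser.link_count >= 100,
        "images_missing_alt": parser.images_missing_alt,
    }
```

Calling `audit(page_source)` on the HTML of a page returns a dictionary you can inspect or log. An empty `title` or a non-empty `images_missing_alt` list points to pages worth fixing first.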

Technical Guidelines

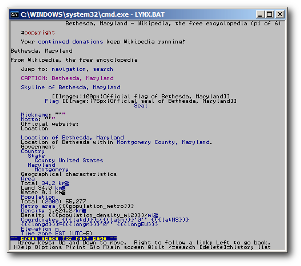

Try out a browser like Lynx to look at your site. Most search crawlers “see” your site like Lynx does. If your site is overloaded with JavaScript, session IDs, Flash, or cookies, the search crawlers may not find your site very navigable.

- Check that your web server supports the “If-Modified-Since” HTTP header. It lets the web server tell Google whether content has changed since the last crawl, which saves you valuable bandwidth and overhead.

- Make sure the robots.txt file on your server is current so it doesn’t block the crawler. You can test your robots.txt file using the robots.txt analysis tool in Webmaster Tools.

- Check your site’s appearance in different browsers to familiarize yourself with what your visitors might see.
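
The “If-Modified-Since” and robots.txt items above can be illustrated with a short sketch that uses only Python’s standard library. The function names `crawler_allowed` and `status_for_conditional_get` are hypothetical, and the second function only models the decision a web server makes; it is not how any particular server implements it. The user agent string "Googlebot" is used as the default for the robots.txt check.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from urllib.robotparser import RobotFileParser


def crawler_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def status_for_conditional_get(if_modified_since, last_modified):
    """Model a server's answer to a conditional GET.

    if_modified_since: raw header value sent by the crawler, or None.
    last_modified: timezone-aware datetime of the page's last change.
    Returns 304 (Not Modified) when the page is unchanged, else 200.
    """
    if not if_modified_since:
        return 200
    try:
        since = parsedate_to_datetime(if_modified_since)
    except (TypeError, ValueError):
        # An unparseable date is ignored and the full page is sent.
        return 200
    if since.tzinfo is None:
        since = since.replace(tzinfo=timezone.utc)
    # HTTP dates have one-second resolution, so drop microseconds.
    return 304 if last_modified.replace(microsecond=0) <= since else 200
```

A 304 response carries no body, which is where the bandwidth saving comes from. For the robots.txt check, pass the text of your own file and a URL you expect to be crawlable; a `False` result means the file is blocking the crawler from that page.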

Quality Guidelines: A List of Don’ts

Before we list the specific practices to avoid, a word of caution: don’t assume that a practice is OK just because it isn’t listed. If you’re using trickery like registering common misspellings of popular sites, Google might well penalize you in the search engine results pages (SERPs) or ban you altogether. Ultimately, looking for loopholes and technicalities may not serve you well.

- Don’t use cheap tricks that might improve your SERP ranking. How would you feel about your competitor doing the same?

- Don’t participate in paid link systems or link farms in an attempt to increase your SERP ranking or PageRank. Avoid links to “bad neighborhoods” on the web. They could adversely affect your ranking.

- Don’t use hidden text, hidden links, “cloaking” practices or questionable redirects.

- Don’t send automated queries to Google.

- Don’t cram pages with keywords that aren’t relevant to your site.

- Don’t create a bunch of pages, domains, or subdomains that all have basically the same content.

- Don’t create pages that carry malware such as viruses and trojans, or that are used for phishing.

- Don’t make doorway pages that exist just for the search engines and have no real content.

When you believe your site is ready and follows all the design, technical, and quality guidelines (especially the latter), then you can submit it to Google at www.google.com/addurl.html. You should also use Google Webmaster Tools to submit a sitemap. A sitemap helps Google cover your webpages more effectively.
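
A sitemap is usually an XML file that lists the URLs you want crawled. The following is a minimal sketch that builds one in the sitemaps.org 0.9 format with Python’s standard library. The function name `build_sitemap` and the example.com URLs and dates are hypothetical; substitute your own pages before uploading the file to your site and submitting its address in Webmaster Tools.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build sitemap XML from (location, last_modified_or_None) pairs.

    Returns the document as a string; save it with UTF-8 encoding.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # Optional date of last change, written as YYYY-MM-DD.
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages used only for illustration.
    pages = [
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/about", None),
    ]
    with open("sitemap.xml", "w", encoding="utf-8") as handle:
        handle.write(build_sitemap(pages))
```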

Starting up a website can be hard work, particularly if you’re new to the world of style sheets and HTML. It isn’t enough to simply create a great-looking site filled with interesting content and wait for people to discover it. Particularly if you have an e-commerce site or a blog from which you want to earn money, you need to make your site as friendly as possible to search engines. Google Webmaster Tools is designed specifically for this purpose: getting your website the notice that it deserves.

While it may be tempting to take shortcuts like buying a bunch of backlinks, doing so can hurt your site in the long run. The best way to build your site’s following is to submit a sitemap and follow the guidelines listed above for proper ways to promote your site. And, of course, the ultimate “tool” for getting your site popular and keeping it popular is to provide fresh, rich content on a regular basis.